Welcome to the next installment in our healthcare AI infrastructure series. If you’ve been following along, we’ve already explored the foundational components of healthcare AI in earlier posts, covering the overall AI lifecycle and diving deeper into AI development and implementation processes. Most recently, we focused on the technical underpinnings of AI development infrastructure and the broader healthcare IT landscape that makes modern AI models possible.

Healthcare AI Implementation Infrastructure

In this post, we shift gears to discuss the nuts and bolts of connecting AI models to real-world clinical workflows, an often overlooked but essential step in the AI lifecycle—implementation. While development gets a lot of attention, the success of any healthcare AI tool is ultimately determined by how well it integrates into existing health IT systems. As we explore the technical infrastructure needed to support these implementations, we’ll break down key concepts, such as internal versus external integration, and provide insights into how these choices shape AI deployments’ reliability, flexibility, and security.

A couple of notes before we start. This material might not seem important to engineers on the AI research and development side, but I would argue that understanding the downstream steps will improve the odds that your projects eventually make a clinical impact, and that exciting research ideas can come out of thinking about implementation. Although implementation goes beyond technology, this post primarily covers the ‘nuts-and-bolts’ of the implementation step, known as technical integration: the process of connecting AI models to existing health IT (HIT) systems.

By the end of this post, you’ll have a clearer understanding of the infrastructure choices that impact the successful implementation of healthcare AI models and how to navigate the complexities of this critical phase in the AI lifecycle. Our goal is to provide you with the knowledge and insights you need to make informed decisions in your healthcare AI projects.

Two Approaches to Implementation

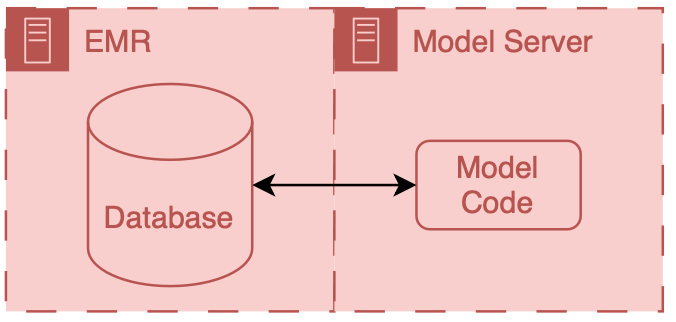

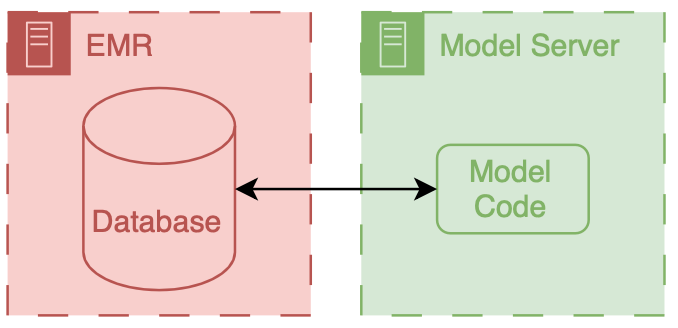

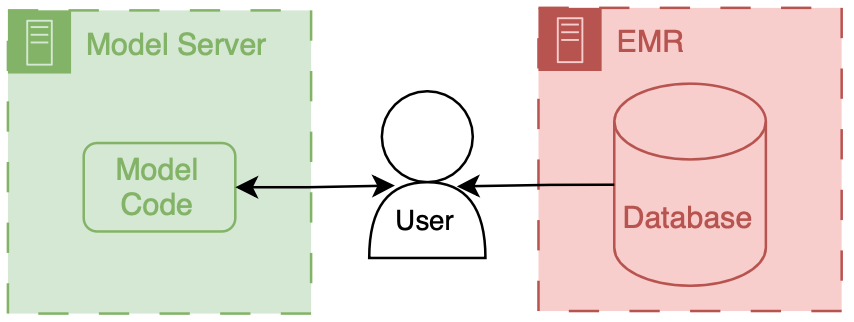

Before we delve into the details, it’s important to understand the two main approaches to integrating a model into health IT systems. These are categorized as internal or external based on their relationship to the electronic medical record (EMR).

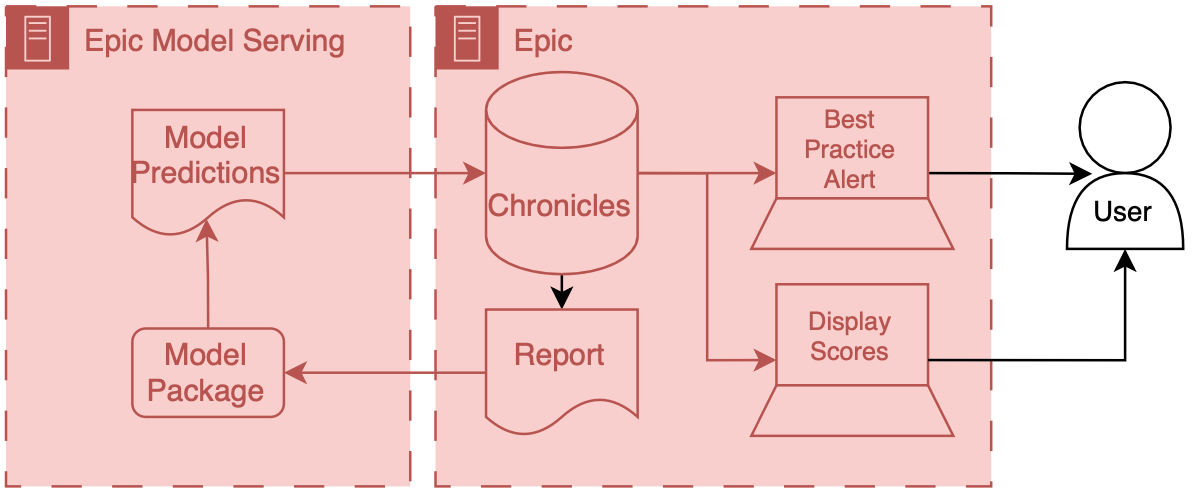

Internal integration of models means that developers rely exclusively on the tooling provided by the EMR vendor to host the model along with all of the logic around running it and filing the results.

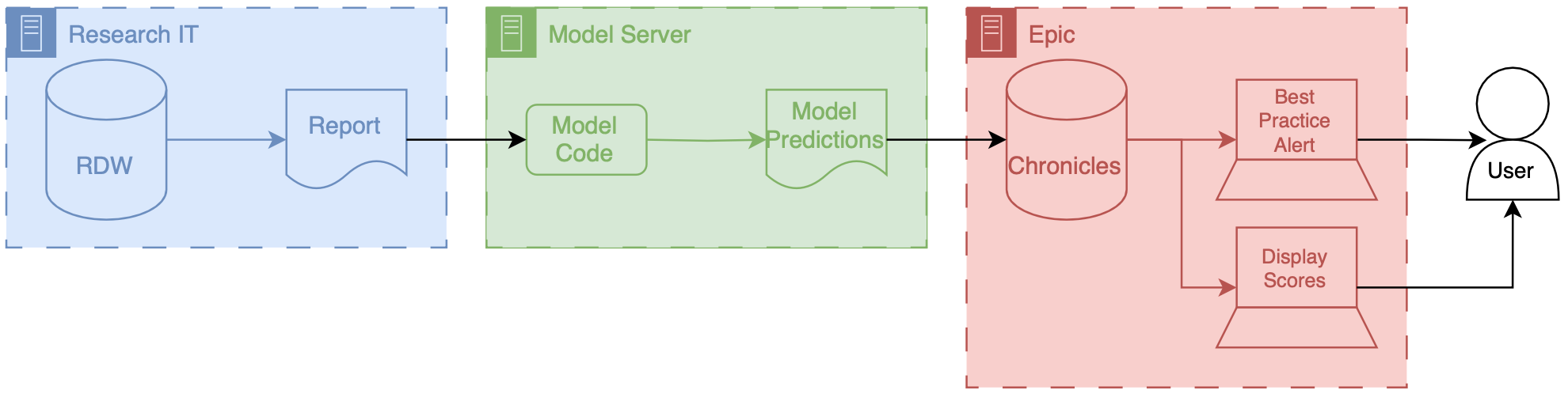

External integration of models means that developers choose to own some parts of the hosting, running, or filing (usually the hosting piece).

In both scenarios, data flows from the EMR database to the model. However, the path these data take can be drastically different, and significant thought should be put into the security of the data and the match between the infrastructure and the model’s capabilities.

Note that both approaches delegate the display of model results to the EMR system: the model’s results are passed to the EMR, and existing EMR tools present them to users.

Internal Integration

The infrastructure choices for internal integration are pretty straightforward; they are all dictated by the EMR vendor, so you might not have any options. In the past, this would have meant re-programming your model to be called by code in the EMR (e.g., for Epic, you would need to have it be a custom MUMPS routine). Luckily, EMR vendors are now building out tools that enable (relatively) easy integration of models.

Limitations

However, some major restrictions exist because these servers are not totally under your control. Instead, they are platforms designed to safely and effectively support a myriad of clinical use cases. Thus, they have a couple of attributes that may be problematic.

The first attribute is sandboxing; the model code runs in a special environment with a pre-specified code library available. As long as you only use code from that library, your model code should function fine. However, you may run into significant issues if your model has a dependency outside that library.
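To make the sandboxing constraint concrete, here is a minimal sketch of a pre-flight check that compares a model's imports against the libraries a sandbox provides. The allow-list `SANDBOX_LIBRARY` and the example `MODEL_SOURCE` are assumptions for illustration; a real vendor environment publishes its own list of available packages, and this is not any vendor's actual tooling.

```python
from __future__ import annotations

import ast

# Hypothetical allow-list of packages available inside the vendor sandbox.
SANDBOX_LIBRARY = {"math", "json", "numpy", "pandas", "sklearn"}


def imported_modules(source: str) -> set[str]:
    """Return the top-level module names imported by a piece of Python source."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Skip relative imports (level > 0); they refer to the model's own code.
            modules.add(node.module.split(".")[0])
    return modules


def unsupported_dependencies(source: str, allowed: set[str] = SANDBOX_LIBRARY) -> set[str]:
    """Return imports that the sandbox's pre-specified library does not cover."""
    return imported_modules(source) - allowed


# Hypothetical model code that relies on one package outside the sandbox.
MODEL_SOURCE = """
import numpy as np
from sklearn.linear_model import LogisticRegression
import torch
"""

missing = unsupported_dependencies(MODEL_SOURCE)
print(sorted(missing))  # prints ['torch']
```

Running a check like this before committing to internal integration can tell you early whether the model needs to be reworked to fit the sandbox.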

The second is conforming to existing software architectures. Expanding enterprise software often means grafting existing components together to create new functionality. For example, existing reporting functionality may be used as the starting point for an AI hosting application. While this makes sense (reporting gets you the input data for your model), you may be stuck with a framework that wasn’t explicitly designed for AI.

Sandboxing and working within existing design patterns mean that square pegs (AI models) may need to be hammered into round holes (vendor infrastructure). Together, this means that you cede a significant amount of control and flexibility. While this could be viewed as procrustean, it forces AI models to adhere to specific standards and ensures a uniform data security floor.

External Integration

External integration offers the opposite approach. Model developers can choose how their model is hosted and operates and how it interfaces with the EMR. This flexibility is especially valuable for cutting-edge research. However, it also comes with the significant responsibility of ensuring that data is handled safely and securely.

External integration offers a path where innovation can meet clinical applications, allowing for a bespoke approach to deploying AI models. This flexibility, however, comes with its own set of challenges and responsibilities, particularly in the realms of security, interoperability, and sustainability of AI solutions.

Limitations

Below are key considerations and strategies for effective external integration of AI in healthcare:

- Security and Compliance: When hosting AI models externally, ensuring the security of patient data and compliance with healthcare regulations such as HIPAA in the United States is paramount. It is essential to employ robust encryption methods for data in transit and at rest, implement strict access controls, and regularly conduct security audits and vulnerability assessments. Utilizing cloud services compliant with healthcare standards can mitigate some of these concerns, but diligent vendor assessment and continuous monitoring are required.

- Interoperability and Data Standards: The AI model must interact with the EMR system to receive input data and return predictions. Adopting interoperability standards such as HL7 FHIR can facilitate this communication, enabling the AI system to parse and understand data from diverse EMR systems and ensuring that the AI-generated outputs are usable within the clinical workflow. An alternative is to use a data integration service like Redox.

- Scalability and Performance: External AI solutions must be designed to scale efficiently with the usage demands of a healthcare organization. This includes considerations (that some may consider boring) for load balancing, high availability, and the ability to update the AI models without disrupting the clinical workflow. Performance metrics such as response time and accuracy under load should be continuously monitored to ensure that the AI integration does not negatively impact clinical operations.

- Support and Maintenance: External AI solutions require a commitment to ongoing maintenance and support to address any issues, update models based on new data or clinical guidelines, and adapt to changes in the IT infrastructure. Establishing clear service level agreements (SLAs) with vendors or internal teams responsible for the AI solution is crucial to ensure timely support and updates.
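As one illustration of the interoperability point above, here is a rough sketch of how an externally hosted model might turn FHIR `Observation` resources into model inputs and wrap a prediction in a FHIR `RiskAssessment` resource to send back. The feature mapping `FEATURES_BY_LOINC`, the function names, the sample observations, and the fixed placeholder probability are all assumptions for illustration; a real integration would also handle units, timestamps, authentication, and the EMR's specific FHIR profile.

```python
import json

LOINC_SYSTEM = "http://loinc.org"

# Hypothetical mapping from LOINC codes to the model's feature names.
FEATURES_BY_LOINC = {"8867-4": "heart_rate", "8310-5": "body_temperature"}


def features_from_observations(observations):
    """Extract numeric model features from a list of FHIR Observation dicts."""
    features = {}
    for obs in observations:
        if obs.get("resourceType") != "Observation":
            continue
        value = obs.get("valueQuantity", {}).get("value")
        if value is None:
            continue
        for coding in obs.get("code", {}).get("coding", []):
            if coding.get("system") != LOINC_SYSTEM:
                continue
            name = FEATURES_BY_LOINC.get(coding.get("code"))
            if name is not None:
                features[name] = float(value)
    return features


def risk_assessment(patient_reference, probability, outcome_text):
    """Wrap a model probability in a minimal FHIR RiskAssessment resource."""
    return {
        "resourceType": "RiskAssessment",
        "status": "final",
        "subject": {"reference": patient_reference},
        "prediction": [
            {"outcome": {"text": outcome_text}, "probabilityDecimal": probability}
        ],
    }


# Synthetic example data; not real patient information.
observations = [
    {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [{"system": LOINC_SYSTEM, "code": "8867-4"}]},
        "subject": {"reference": "Patient/example"},
        "valueQuantity": {"value": 88, "unit": "beats/minute"},
    },
    {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [{"system": LOINC_SYSTEM, "code": "8310-5"}]},
        "subject": {"reference": "Patient/example"},
        "valueQuantity": {"value": 38.2, "unit": "Cel"},
    },
]

features = features_from_observations(observations)
placeholder_probability = 0.27  # stands in for a call to the hosted model
result = risk_assessment("Patient/example", placeholder_probability, "Deterioration")
print(json.dumps(result, indent=2))
```

Keeping the FHIR parsing and the result formatting separate from the model itself means the same model could sit behind a different interface (or a service like Redox) without changes to its code.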

Bonus: Another Approach to External “Integration”

Safety and security can be a significant obstacle to external integration. Setting up the necessary connections and infrastructure between the EMR and your system can consume most of your development time. If you wanted to avoid the complexity of full integration but still allow clinical users to interact with your model, you could offer them a secure online interface. In this setup, the users act as intermediaries, manually inputting and retrieving information from the model.

MDCalc follows this approach, offering numerous models for physicians to input data directly. These tools are handy in clinical practice but not integrated into the EMR.

If the amount of data your model uses is small (a handful of simple data elements), this could be a viable approach. If you don’t collect PHI/PII, you could set up your own MDCalc-like interface for your hosted model.
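For a sense of how small the core of such a tool can be, here is a minimal sketch of a calculator that takes a handful of manually entered values and returns a risk estimate. The function `risk_score`, its inputs, and especially the coefficients are hypothetical and for illustration only; this is not a validated clinical model, and the secure web interface around it is left out.

```python
import math

# Hypothetical coefficients, for illustration only; not a validated clinical model.
INTERCEPT = -4.0
WEIGHTS = {"age_years": 0.03, "heart_rate": 0.01, "on_oxygen": 0.8}


def risk_score(age_years: float, heart_rate: float, on_oxygen: bool) -> float:
    """Return a probability from a simple logistic model over user-entered values."""
    # Basic range checks catch typos, since a person is entering values by hand.
    if not 0 <= age_years <= 120:
        raise ValueError("age_years must be between 0 and 120")
    if not 20 <= heart_rate <= 300:
        raise ValueError("heart_rate must be between 20 and 300")

    z = (
        INTERCEPT
        + WEIGHTS["age_years"] * age_years
        + WEIGHTS["heart_rate"] * heart_rate
        + WEIGHTS["on_oxygen"] * (1.0 if on_oxygen else 0.0)
    )
    return 1.0 / (1.0 + math.exp(-z))


# No identifiers are collected: only the few values the model needs.
print(f"Estimated risk: {risk_score(age_years=70, heart_rate=100, on_oxygen=True):.1%}")
```

Because the inputs carry no names, record numbers, or dates, a tool shaped like this can stay clear of PHI/PII as long as the surrounding interface does not log anything identifying.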

We won’t discuss this architecture in-depth, but it’s an exciting way to make tools directly for clinicians.

Parting Thoughts

In choosing between internal and external integration for healthcare AI models, you must weigh the benefits and limitations of each approach. Internal integration offers simplicity and security by operating within the confines of existing EMR systems, but this comes at the cost of flexibility. External integration provides greater control and customization, which can be invaluable for cutting-edge AI tools, but this flexibility comes with increased responsibility for security, compliance, and interoperability.

Ultimately, the decision between these approaches hinges on your specific needs—whether you’re focused on rapid deployment with minimal disruption or seeking a more customized, innovative solution that can push the boundaries of what’s possible.

Looking Ahead

The infrastructure supporting these systems must adapt as healthcare AI continues to evolve. With increasing pressures on health systems to integrate advanced AI solutions, understanding the nuances of technical integration will be critical for success. The ability to connect models to clinical workflows effectively, ensure data security, and maintain operational efficiency will separate successful AI projects from those that never make it to the bedside.

In the next few blog posts, I’ll dive deeper into some real-world applications and examples of how these concepts play out. I’ll explore our C. difficile infection model integration, the M-CURES system for COVID-19 deterioration, and best practices for technical integration testing. These case studies will provide more concrete examples of the challenges and solutions that arise when moving from theory to practice.

Whether you’re developing a new AI tool or preparing to integrate one into your health system, remember that implementation is not just about technology—it’s about aligning people, processes, and tools to ensure that AI improves patient care.

Cheers,

Erkin
