You’ve developed an AI model that could “revolutionize” patient care—but how do you ensure it truly impacts the clinical workflow and improves outcomes? This is where implementation becomes paramount.

Implementing Healthcare AI

This post builds on a previous introduction to the healthcare AI lifecycle and a discussion of healthcare AI development. Neither is required reading, but both provide good background for the main focus of this post: implementation. Implementation is the work of integrating an AI model into clinical care and putting it to use.

This post first covers the key implementation steps and then the general challenges associated with implementing AI tools in healthcare.

Implementation Steps

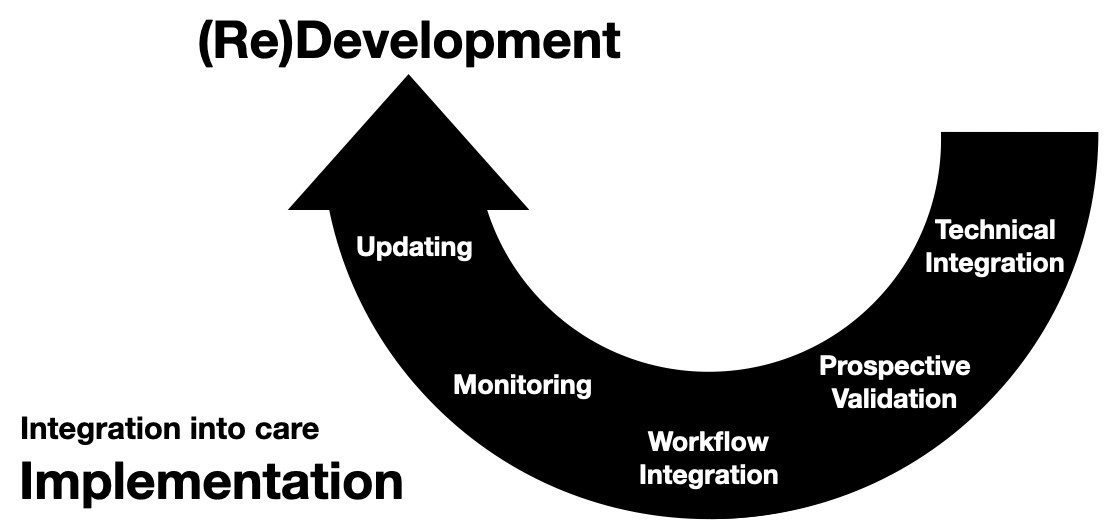

As with the development process, I break implementation down into five steps.

- Technical Integration

- Prospective Validation

- Workflow Integration

- Monitoring

- Updating

There may be some non-linearity and blurring of these steps; however, implementation tends to work better the more structured this process is. That’s because there’s more “on the line” the further along the lifecycle you get. So, it’s best to be sure you’ve perfected a step before moving on to the next.

Technical Integration

Technical integration is the first step, and it involves getting the AI model to communicate with existing healthcare IT systems. Model developers will need to work closely with IT departments to ensure that data can flow smoothly (and securely) from care systems, such as electronic medical records (EMRs), to the AI model and back.

Significant effort will need to be expended at this stage. Although model developers and healthcare IT (HIT) administrators are technically inclined, they may need help working together initially due to their focus on different technologies and differing priorities. Thus, it can take a lot of work for a model developer to explain the needs of their model, and HIT administrators may need to expend additional effort to make their existing technology stacks compatible with AI models.

I’ll have several blog posts detailing some technical approaches, but it’s important to note that all of these steps are complex socio-technical processes. We should push to use a layer of technology standards, like FHIR, but we should also develop good governance and processes around technical integration.
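To make the role of a standards layer a little more concrete, here is a minimal sketch of turning FHIR Observation resources into a flat set of model inputs. The field names (`resourceType`, `code.coding`, `valueQuantity`) follow the FHIR Observation resource and the codes are LOINC vital-sign codes, but the feature names, the helper functions, and the example bundle are my own assumptions for illustration, not part of any particular integration.

```python
from typing import Optional

LOINC_SYSTEM = "http://loinc.org"

# LOINC codes for a few vital signs; the feature names are illustrative.
LOINC_TO_FEATURE = {
    "8867-4": "heart_rate",
    "8310-5": "body_temperature",
    "9279-1": "respiratory_rate",
}


def observation_to_feature(observation: dict) -> Optional[tuple]:
    """Map one FHIR Observation to a (feature_name, value) pair.

    Returns None when the resource is not a recognized vital sign
    or does not carry a numeric valueQuantity.
    """
    if observation.get("resourceType") != "Observation":
        return None
    value = (observation.get("valueQuantity") or {}).get("value")
    if value is None:
        return None
    for coding in observation.get("code", {}).get("coding", []):
        if coding.get("system") != LOINC_SYSTEM:
            continue
        feature = LOINC_TO_FEATURE.get(coding.get("code"))
        if feature is not None:
            return feature, float(value)
    return None


def bundle_to_features(bundle: dict) -> dict:
    """Collect recognized vitals from a FHIR Bundle into a flat feature dict."""
    features = {}
    for entry in bundle.get("entry", []):
        pair = observation_to_feature(entry.get("resource", {}))
        if pair is not None:
            name, value = pair
            features[name] = value  # later entries overwrite earlier ones
    return features


# Hypothetical bundle, as it might arrive from an EMR's FHIR interface.
example_bundle = {
    "resourceType": "Bundle",
    "entry": [
        {"resource": {
            "resourceType": "Observation",
            "code": {"coding": [{"system": LOINC_SYSTEM, "code": "8867-4"}]},
            "valueQuantity": {"value": 112, "unit": "beats/minute"},
        }},
        {"resource": {
            "resourceType": "Observation",
            "code": {"coding": [{"system": LOINC_SYSTEM, "code": "8310-5"}]},
            "valueQuantity": {"value": 38.4, "unit": "Cel"},
        }},
    ],
}

print(bundle_to_features(example_bundle))
```

A real integration would also need to handle units, timestamps, and repeated measurements, which is part of why the governance and process side matters as much as the code.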

Finally, technical integration must be conducted before assessing whether an AI model will work well for a given health system. That’s because the model’s performance isn’t truly known until it starts running in situ.

Prospective Validation

Prospective validation is the first high-fidelity test of the model. It’s about running the model in the real world but in a controlled manner. The aim is to see how the model performs with live data without directly impacting patient care. This step is critical for assessing the model’s readiness for full-scale implementation and identifying any unforeseen issues that might not have been apparent during development.

I recommend that all model developers and implementers aim to conduct a silent prospective validation, which is where the model’s predictions are tested in a live clinical data environment without affecting clinical decisions (scores/alerts are not shown to users).
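As a rough sketch of what a silent run could look like in code, the class below records scores as the model produces them, never surfaces them to users, and evaluates them once outcomes are known. The class name, its methods, and the choice to keep only the first score per encounter are assumptions I am making for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SilentValidationLog:
    """Stores model scores during a silent run; nothing is shown to clinicians."""
    scores: dict = field(default_factory=dict)    # encounter_id -> model score
    outcomes: dict = field(default_factory=dict)  # encounter_id -> 0 or 1

    def record_score(self, encounter_id: str, score: float) -> None:
        # Simplification: keep only the first score logged for each encounter.
        self.scores.setdefault(encounter_id, score)

    def record_outcome(self, encounter_id: str, outcome: int) -> None:
        self.outcomes[encounter_id] = outcome

    def auroc(self) -> float:
        """AUROC over encounters that have both a score and an outcome."""
        ids = [i for i in self.scores if i in self.outcomes]
        pos = [self.scores[i] for i in ids if self.outcomes[i] == 1]
        neg = [self.scores[i] for i in ids if self.outcomes[i] == 0]
        if not pos or not neg:
            raise ValueError("need at least one positive and one negative outcome")
        # Probability that a random positive outranks a random negative;
        # ties count as half a win.
        wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
        return wins / (len(pos) * len(neg))


# Hypothetical silent run over four encounters.
log = SilentValidationLog()
for encounter, score in [("a", 0.9), ("b", 0.2), ("c", 0.6), ("d", 0.4)]:
    log.record_score(encounter, score)
for encounter, outcome in [("a", 1), ("b", 0), ("c", 0), ("d", 1)]:
    log.record_outcome(encounter, outcome)

print(log.auroc())
```

The point of the design is the separation: scoring happens on live data, but the only consumer of the scores is the evaluation step.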

Prospective validation is sometimes the only way to assess if your model development and technical integration worked correctly. We did a deep dive into an AI model we developed and implemented for the health system. This work is cataloged in the Mind the Performance Gap: Dataset Shift During Prospective Validation paper. In addition to discussing prospective validation, we uncovered a new type of dataset shift driven primarily by issues in our health IT infrastructure. The difference between the data our model saw during development and implementation environments caused a noticeable degradation in performance. So, we needed to rework our model and the technical integration to ameliorate this performance degradation.
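One simple way to look for this kind of gap is to compare each feature as the development pipeline produced it with the same feature as the live pipeline produces it. The sketch below uses a standardized mean difference; this is a generic check I am using for illustration, not the analysis from the paper, and the 0.2 threshold and the example values are assumptions.

```python
from statistics import mean, stdev


def standardized_mean_difference(dev_values, live_values):
    """Difference in means divided by the pooled standard deviation."""
    pooled_var = (stdev(dev_values) ** 2 + stdev(live_values) ** 2) / 2
    if pooled_var == 0:
        return 0.0 if mean(dev_values) == mean(live_values) else float("inf")
    return (mean(live_values) - mean(dev_values)) / pooled_var ** 0.5


def flag_shifted_features(dev, live, threshold=0.2):
    """Return features whose absolute SMD exceeds a (hypothetical) threshold."""
    flagged = {}
    for name in dev.keys() & live.keys():
        smd = standardized_mean_difference(dev[name], live[name])
        if abs(smd) > threshold:
            flagged[name] = smd
    return flagged


# Hypothetical data: in the live pipeline, missing lactate values arrive as 0.0.
dev = {"heart_rate": [80, 90, 100, 110], "lactate": [1.0, 1.5, 2.0, 2.5]}
live = {"heart_rate": [82, 88, 101, 109], "lactate": [0.0, 0.0, 1.5, 2.0]}

flagged = flag_shifted_features(dev, live)
print(flagged)
```

In this toy example only `lactate` is flagged, which would prompt a closer look at how the two pipelines handle missing lab values.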

Workflow Integration

Integrating an AI model into clinical workflows is more art than science. Fundamentally, you need to understand how healthcare professionals work, what information they seek, and when they need it. Ultimately, we want AI tools to fit into physician routines and not disrupt them.

The default has been to “alert” clinicians by pushing information as a page or pop-up (a best practice alert, or BPA). While these may have a place in some workflows, I find them particularly irksome in my daily practice as they tend to break my mental flow. We should push for other ways to integrate the outputs of AI tools into our workflows.

One approach might involve designing intuitive user interfaces for clinicians or setting up alert systems that are less disruptive but still provide actionable insights. For example, it would be great if there were a chat-like interface that enabled AI tools to ping me with messages that were previously BPAs. I could work through these messages and query the AI system with follow-up questions.

Monitoring

The job isn’t over once an AI model is up and running. Continuous monitoring ensures the model remains performant and relevant over time. This process involves tracking the model’s performance, identifying any drifts in accuracy, and being alert to changes in clinical practices that might affect how the model should be used.

Keeping track of basic model performance statistics, such as the number of alerts, sensitivity, and positive predictive value, can be challenging. That is because we lack the infrastructure to do this automatically. So even if a model developer completes all the implementation work, they may have to manually set up the logging necessary to collect these statistics. Hopefully, this will become easier as we develop more robust tools, like Epic’s Seismometer.
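For reference, here is a minimal sketch of how those three statistics could be computed from logged predictions. It assumes you already have (score, outcome) pairs and a fixed alert threshold; the function name and the example records are hypothetical.

```python
def monitoring_summary(records, threshold):
    """Summarize alerts for (score, outcome) pairs; outcome is 1 if the event occurred."""
    tp = fp = fn = 0
    for score, outcome in records:
        alerted = score >= threshold
        if alerted and outcome == 1:
            tp += 1
        elif alerted and outcome == 0:
            fp += 1
        elif not alerted and outcome == 1:
            fn += 1
    n_alerts = tp + fp
    return {
        "n_alerts": n_alerts,
        # None signals "undefined" when there are no events or no alerts.
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "ppv": tp / n_alerts if n_alerts else None,
    }


# Hypothetical log for one monitoring window.
records = [(0.9, 1), (0.8, 0), (0.7, 1), (0.3, 1), (0.2, 0), (0.1, 0)]
summary = monitoring_summary(records, threshold=0.5)
print(summary)
```

The calculation itself is trivial; the hard part in practice is reliably capturing the scores and linking them to outcomes.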

It would be great to go beyond basic monitoring. I imagine a future in which AI tool users can provide real-time performance feedback, and developers can use that feedback to improve models.

Updating

You don’t “set it and forget it” with AI models in healthcare. Models must be maintained as medical knowledge advances and patient populations change. Updating models might involve:

- retraining with new data,

- incorporating feedback from users, or

- redesigning the model to accommodate new clinical guidelines or technologies.

Ensuring models remain current and relevant involves more than just routine retraining with new datasets. It demands a thoughtful approach, considering how updates might impact the user’s trust and the model’s usability in clinical settings. This challenge is where our recent work on Updating Clinical Risk Stratification Models Using Rank-Based Compatibility comes into play. We developed mathematical techniques to ensure that updated models maintain the correct behavior of previous models that physicians may have come to depend on.

Updating models to maintain or enhance their performance is crucial, especially as new data become available or when data shifts occur. However, these updates must maintain the user’s expectations and the established workflow. Our research introduced a novel rank-based compatibility measure that allows us to evaluate and ensure that the updated model’s rankings align with those of the original model, preserving the clinician’s trust in the AI tool.
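To give a feel for the idea, here is a sketch of a measure in that spirit: among the (positive, negative) patient pairs that the original model ranked correctly, what fraction does the updated model also rank correctly? This is my simplified paraphrase for illustration; please refer to the paper for the exact definition. The function name and example scores are assumptions.

```python
def rank_based_compatibility(y, original_scores, updated_scores):
    """Fraction of pairs ranked correctly by the original model that the
    updated model also ranks correctly.

    A pair is one patient with the outcome (y == 1) and one without (y == 0);
    it is ranked correctly when the patient with the outcome scores higher.
    """
    correct_original = 0
    correct_both = 0
    for i, y_i in enumerate(y):
        if y_i != 1:
            continue
        for j, y_j in enumerate(y):
            if y_j != 0:
                continue
            if original_scores[i] > original_scores[j]:
                correct_original += 1
                if updated_scores[i] > updated_scores[j]:
                    correct_both += 1
    if correct_original == 0:
        raise ValueError("original model ranks no pairs correctly")
    return correct_both / correct_original


# Hypothetical scores for four patients (two with the outcome, two without).
y = [1, 1, 0, 0]
original = [0.9, 0.4, 0.2, 0.6]
updated = [0.8, 0.7, 0.75, 0.1]

print(rank_based_compatibility(y, original, updated))
```

A value of 1.0 would mean the update preserved every correct ranking of the original model; lower values mean some behavior that users may have relied on has changed, even if overall discrimination improved.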

Challenges

Implementing AI models into clinical care can be challenging. During model implementation, the goal is to use models to estimate unknown information that can be used to guide various healthcare processes. This real-world usage exposes models to the transient behaviors of the healthcare system. Over time, we expect the model’s performance to change. Even though the model in use may not be changing, the healthcare system is, and these changes in the healthcare system may reflect new patterns that the model was not trained to identify.

It is essential to contrast this with the fact that the model itself may also change over time.[1] Although we often talk about static models (which model developers may update occasionally), some models are inherently dynamic: they change their behavior over time. Employing updated and dynamic models introduces a second set of factors that affect how a model’s performance changes over time. Thus, it can be hard to tell whether an issue arises from new model behavior or from changes in the healthcare system.

To make things more concrete, here are some examples:

- A model flags patients based on their risk of developing sepsis. There is an increase in the population of patients admitted with respiratory complaints due to a viral pandemic. This change in patient population leads to a massive increase in the number of patients the model flags, and the overall model performance drops because these patients do not end up experiencing sepsis. This is an example of a static model being affected by changes in the healthcare system over time.

- A model identifies physicians who could benefit from additional training. The model uses a limited set of specially collected information. Model developers create a new model version that utilizes EMR data. After implementation, the updated model identifies physicians with better accuracy. This improvement is an example of a static model being updated to improve performance over time.
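The first example can be made concrete with some back-of-the-envelope arithmetic. The sketch below uses entirely hypothetical numbers: a baseline cohort, plus a pandemic surge of respiratory admissions in which few patients develop sepsis but many look "septic" to the model (modeled here as lower specificity in that cohort).

```python
def expected_alerts(n_septic, n_not_septic, sensitivity, specificity):
    """Expected true and false alerts for a cohort at a fixed alert threshold."""
    true_alerts = sensitivity * n_septic
    false_alerts = (1 - specificity) * n_not_septic
    return true_alerts, false_alerts


def ppv(true_alerts, false_alerts):
    """Positive predictive value: the share of alerts that are true alerts."""
    return true_alerts / (true_alerts + false_alerts)


# Hypothetical baseline cohort: 1,000 patients, 100 of whom develop sepsis.
base_true, base_false = expected_alerts(100, 900, sensitivity=0.8, specificity=0.9)

# Hypothetical surge cohort: 500 respiratory admissions, 10 of whom develop sepsis.
surge_true, surge_false = expected_alerts(10, 490, sensitivity=0.8, specificity=0.6)

total_true = base_true + surge_true
total_false = base_false + surge_false

print(f"alerts before: {base_true + base_false:.0f}, PPV {ppv(base_true, base_false):.2f}")
print(f"alerts after:  {total_true + total_false:.0f}, PPV {ppv(total_true, total_false):.2f}")
```

With these made-up numbers, alerts roughly double (about 170 to about 374) while PPV falls by about half (roughly 0.47 to 0.24), even though the model itself never changed.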

Transition from Bench-to-Bedside

Implementation into clinical care requires the model to be connected to systems that can present it with real-time data. We refer to these systems as infrastructure: the systems (primarily IT systems) needed to take data recorded during clinical care operations and present it in a format accessible to ML models. This infrastructure determines the availability, format, and content of information. Although data may be collected in the same source HIT system (e.g., an EMR system), the data may pass through a different series of extract, transform, and load (ETL) processes (sometimes referred to as pipelines) depending on the data use target.

Once connected to clinical care, ML models need monitoring and updating. For example, developers may want to incorporate knowledge about a new biomarker that changes how a disease is diagnosed and managed. Model developers may thus consider updating models as a part of their regular maintenance.

Physician-AI Teams

This maintenance is complicated because models do not operate in a vacuum. In many application areas, users interact with models and learn about their behavior over time. In safety-critical applications, like healthcare, models and users may function as a team. The user and model each individually assess patients. The decision maker, usually the user, considers both assessments (their own and the model’s) and then makes a decision based on all available information. The performance of this decision is the user-model team performance.

A Note on Deployment vs Integration vs Implementation

As we finish, I want to make a quick note on terminology. We often use the terms implementation, deployment, and integration interchangeably; however, there are subtle but important distinctions between them. I want to be more precise about how we use these three terms. Clearly defining them will help us discuss connecting AI tools to care processes more effectively.

- Deployment: This one has a heavy-handed vibe; it may conjure up images of a military operation. In the tech realm, it’s about pushing out code or updates from one side (developers) without much say from the other (users). I view it as a one-way street, with the developers calling the shots. However, this mindset doesn’t yield great results in healthcare, where the stakes are high and workflow and subject matter expertise are paramount. We can deploy code, but we should be wary of deploying workflows. Instead, we should co-develop workflows with all the necessary stakeholders.

- Integration: This is the process of getting an AI model to work with the existing tech stack, like fitting a new piece into a complex puzzle. But just because the piece fits doesn’t mean it will be used effectively or at all. Integration focuses on the technical handshake between systems, but it can miss the bigger picture: workflow needs and human factors.

- Implementation: This is where the magic happens. It’s not just about the technical melding of AI into healthcare systems; it’s about weaving it into the fabric of clinical workflows and practices. It’s a two-way street, a dialogue between developers and end-users (clinicians and sometimes patients). Implementation is a collaborative, evolving process that treats users as partners in the socio-technical development of an AI system. It acknowledges that for AI to make a difference, it needs to be embraced and utilized by those on the front lines of patient care.

So, when discussing AI in healthcare, let’s lean more towards implementation. It’s about more than just getting the tech right; it’s about fostering a collaborative ecosystem where we can make tools that genuinely contribute to better health outcomes by meeting the needs of clinical users and workflows.

Wrapping Up

We’ve walked through technical integration, prospective validation, workflow integration, monitoring, and updating, each with its own challenges and nuances. We’ve also untangled some jargon (implementation, deployment, and integration): words that might seem interchangeable but carry different implications in healthcare AI. Implementation is more than a technical task; it’s a joint endeavor between developers and clinicians to ensure AI tools fit into healthcare workflows and genuinely enhance patient care.

This post wraps up our overview of the healthcare AI lifecycle. To understand the technical infrastructure that powers these models, check out the post on Healthcare AI Infrastructure.

Some of this content was adapted from the introductory chapter of my doctoral thesis, Machine Learning for Healthcare: Model Development and Implementation in Longitudinal Settings.

Cheers,

Erkin


[1] Herein lies an essential point for clarification with lay audiences. Most people unfamiliar with ML/AI have some expectation that models in use have a default dynamic updating behavior (i.e., that models are learning continuously over time). Dynamic updating hasn’t generally been the case with models deployed in healthcare.