File: 2013-01-01-I-PrACTISE.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “I-PrACTISE” categories:

- Blog

tags:

- medicine

- healthcare

- primary care

- industrial engineering

- human factors engineering

- health systems engineering

excerpt: “Improving Primary Care Through Industrial and Systems Engineering”

Helped to create Improving Primary Care Through Industrial and Systems Engineering (I-PrACTISE) collaborative. I-PrACTISE is an educational and research collaborative focused on connecting problems in Primary Care with solutions from Industrial Engineering.

It is a formal partnership between the University of Wisconsin Department of Industrial and Systems Engineering, and the Departments of Family Medicine and Community Health, Medicine and Pediatrics of the UW School of Medicine and Public Health.

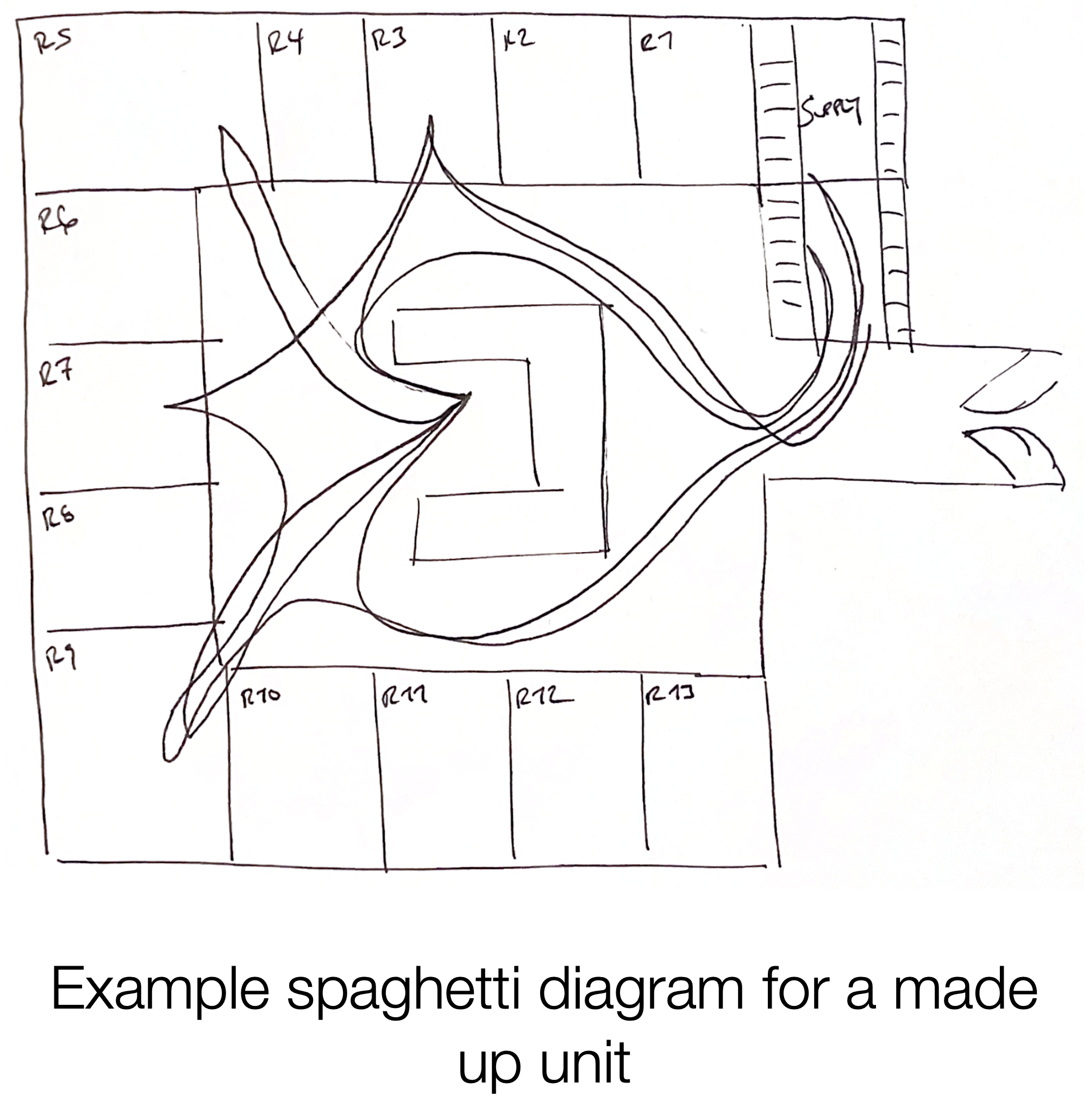

I-PrACTISE focuses on applying industrial engineering methods and systems thinking to primary care healthcare settings, aimed at improving patient outcomes while reducing costs and minimizing waste. By doing so, they seek to address some of the challenges facing modern healthcare delivery, which includes rising healthcare costs, limited resources, and burnout.

The goal of I-PrACTISE is to develop a home for cross-disciplinary research to foster development of innovative solutions that involve re-engineering existing clinical workflows and tools.

Vision

The care of patients will be improved and the practice of primary care medicine will become more efficient through new knowledge and techniques created by the collaboration between Industrial Engineering and the primary care specialties.

Mission

Create a home for scholars and clinicians with interest and expertise in industrial engineering and/or primary care to conduct funded projects directed at improving the quality of primary care for patients, clinicians and staff.

Membership

The membership consists of interested UW Faculty from the School of Medicine and Public Health and the Department of Industrial and Systems Engineering as well as interested scholars from other professions and institutions.

File: 2013-04-01-I-PrACTISE-White-Paper.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “I-PrACTISE White Paper” categories:

- Blog

- Research tags:

- Blog

- Research

- primary care

- medicine

- healthcare

- industrial engineering

- health system engineering

- human factors engineering

- I-PrACTISE excerpt: “Findings from the first I-PrACTISE conference bringing together physicians and engineers.” —

The first Improving PrimAry Care Through Industrial and Systems Engineering (I-PraCTISE) conference was held at Union South at the University of Wisconsin - Madison in April of 2013. It was funded by the Agency for Healthcare Research and Quality and co-sponsored by the UW - Madison Departments of Family Medicine and Industrial and Systems Engineering. A key objective of the first I-PrACTISE conference was to develop a cross-disciplinary research agenda, bringing together engineers and physicians.

I helped to organize themes from across the conference and created this paper to summarize our findings.

Abstract

Primary healthcare is in critical condition with too few students selecting careers, multiple competing demands stressing clinicians, and increasing numbers of elderly patients with multiple health problems. The potential for transdisciplinary research using Industrial and Systems Engineering (ISyE) approaches and methods to study and improve the quality and efficiency of primary care is increasingly recognized. To accelerate the development and application of this research, the National Collaborative to Improve Primary Care through Industrial and Systems Engineering (I-PrACTISE) sponsored an invitational conference in April, 2013 which brought together experts in primary care and ISyE. Seven workgroups were formed, organized around the principles of the Patient Centered Medical Home: Team-Based Care, Coordination and Integration, Health Information Technology (HIT) – Registries and Exchanges, HIT – Clinical Decision Support and Electronic Health Records, Patient Engagement, Access and Scheduling, and Addressing All Health Needs. These groups: (A) Explored critical issues from a primary care perspective and ISyE tools and methods that could address these issues; (B) Generated potential research questions; and (C) Described methods and resources, including other collaborations, needed to conduct this research.

Download paper.———————— File: 2015-01-31-SMS-Website.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Send Me Specials Website” categories:

- Blog

- Project tags:

- software development

- startups

- business

excerpt: “Developed a custom text message gateway for businesses to reach their customers.”

In the days prior to wide smartphone adoption it was hard to find deals on meals and drinks as broke college students on the go.

SMS bottlecap logo

In order to enable restaurants and bars to reach out to college age customers Adam Maus and I created a custom text message gateway integrated with an application and website. These businesses could upload information about their menus and weekly specials and then share them with interested customers by sending out a text message blast.

SMS welcome screen

SMS gateway services existed at the time, but they were very expensive (i.e., you had to pay for each text). To avoid paying per text we got an android smartphone and had it serve as the text message router. We had a webservice that would pass information to an app on the smartphone which would then send text messages using its unlimited data and text plan.

SMS messaging screen

Ultimately, while we were technically successful this project didn’t really go anywhere. We were not addressing a pain point that businesses in Madison were experiencing. Students would have benefited, but they weren’t our “customers”. Cautionary tale on doing good customer discovery and working hard to achieve product-market fit. That’s more important than cool technology.

File: 2015-03-31-SHS-FlexSim.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “2015 FlexSim - SHS ED Modeling Competition” categories:

- Blog

- Project

- Research tags:

- operations research

- healthcare

- emergency medicine

- health system engineering

- simulation excerpt: “Modeling and optimizing the operations of an emergency department using discrete event simulation.” —

I led the University of Wisconsin team to victory in the inaugural FlexSim - SHS Emergency Department Modeling Competition in 2015. This international competition was sponsored by Flexsim Healthcare and took place at the 2015 Orlando Society for Health Systems conference. The team consisted of Samuel Schmitt, April Sell, Michael Russo and myself. We were advised by Dr. Brian Patterson and Dr. Laura Albert.

This case competition involved optimizing the operations of an emergency department (ED) using discrete event simulation and operations research tools. The goal was to analyze the Susquehanna Health ED’s current operations and determine the best care delivery model to meet productivity requirements while satisfying staffing and care constraints.

We used a combination of discrete event simulation (FlexSim healthcare software), design of experiments, and mathematical programming to determine the ideal care delivery model. See below for a copy of our winning presentation.

Executive Summary

Susquehanna Health, a four‐hospital, not‐for‐profit health system, has deployed an Emergency Department (ED) Leadership Team to reduce expenses and optimize operations at their flagship hospital, Williamsport Regional Medical Center (WRMC). The Emergency Department has been experiencing pressure from a recently enacted marketing campaign that ensures patients are seen by a provider in 30 minutes or less at two competitor hospitals in the region. This campaign concerns Susquehanna Health because their current average door to provider time is 42.7 minutes with peak times as long as 140 minutes. As a result, 2.8% of their patients are leaving without being seen.

The Susquehanna Health System needs to be competitive in order to face today’s healthcare trends of declining reimbursement, increasingly high debt, and greater focus on outpatient services. The Emergency Department Leadership Team reached out to UW‐Madison’s Industrial & Systems Engineering students to assist them in creating a simulation that will help them improve patient safety, staff productivity, and overall efficiency.

The UW‐Madison Industrial & Systems Engineering students developed a discrete‐event simulation of WRMC Emergency Department’s traditional triage and bed process using FlexSim HC simulation software. Input data consisted of processing time distributions and probabilities supplied from the Emergency Department Leadership Team. To enhance the accuracy of the model, the team also collaborated with physicians at the University of Wisconsin Hospitals and Clinics (UWHC) to gather information on average processing times. Based on best practices in other institutions, simulation models were created to represent the two additional delivery methods: PITT and PITT/Super Fast Track.

After the modeling process was completed the team ran a series of experiments to determine the optimal delivery method and staffing levels. Super Fast Track appeared to be the best delivery system, however the team recommends that this analysis be redone on a more powerful machine. The machine used for modeling was not powerful enough to run the simulation experiments needed for statistical certainty.

The team views this as the first phase of a longer term project. The team will continue to refine the model and run new experiments once a new machine is procured. Collaborators at the UW – Madison, School of Medicine and Public Health, have asked the team to build a second set of models to be used for the UW Health ED.

Download paper.

File: 2015-10-12-Optimizing-the-ER.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Wisconsin Engineer: Optimizing the ER” categories:

- Blog

- Press tags:

- Blog

- Press excerpt: “Article discussing Flexsim-SHS competition win.” —

April Sell, Samuel Schmitt, and I discussed our win at the Flexsim-SHS Emergency Department Modeling Competition with Kelsey Murphy for an article in the Wisconsin Engineer magazine.

File: 2015-10-31-Predicting-ED-Patient-Throughput.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Predicting ED Patient Throughput Times Utilizing Machine Learning” categories:

- Blog

- Research tags:

- Blog

- Research

- emergency medicine

- medicine

- healthcare

- health system engineering

- artificial intelligence

- machine learning

- operations research excerpt: “Machine learning for emergency department throughput prediction.” —

Annals of Emergency Medicine research forum abstract. Work done in conjunction with Dr. Brian Patterson and Dr. Laura Albert. Link to paper.

Abstract

Study Objectives

Patient throughput time in the emergency department is a critical metric affecting patient satisfaction and service efficiency. We performed a retrospective analysis of electronic medical record (EMR) derived data to evaluate the effectiveness of multiple modeling techniques in predicting throughput times for patient encounters in an academic emergency department (ED). Analysis was conducted using various modeling techniques and on differing amounts of information about each patient encounter. We hypothesized that more comprehensive and inclusive models would provide greater predictive power.

Methods

Retrospective medical record review was performed on consecutive patients at a single, academic, university-based ED. Data were extracted from an EMR derived dataset. All patients who presented from January 1, 2011 to December 31, 2013 and met inclusion criteria were included in the analysis. The data were then partitioned into two sets: one for developing models (training) and a second for analyzing the predictive power of these models (testing). The Table lists model types used. The primary outcome measured was the ability of the trained models to accurately predict the throughput times of test data, measured in terms of mean absolute error (MAE). Secondary outcomes were R2 and mean squared error (MSE). Model factors included a mix of patient specific factors such as triage vital signs, age, chief complaint; factors representing the state of the ED such as census and running average throughput time; and timing factors such as time of day, day of week, and month. The most comprehensive models included a total of 29 distinct factors.

Results

Of the 134,194 patients that were seen in the 3-year period of the study 128,252 met the inclusion criteria; the mean throughput time was 183.327 min (SD 1⁄4 98.447 min). Compared to using a single average throughput time as a naïve model (MAE 1⁄4 80.801 min), univariate models provided improved predictive abilities. More sophisticated models, using machine learning methods and including all available factors provided greater predictive power with the lowest MAE achieved at 73.184 min.

Conclusion

We have demonstrated that including information about incoming patients and the state of the ED at the time of an arrival can aid in the prediction of individual patients’ throughput times. The Multiple Linear Regression model, including all available factors, had the highest predictive accuracy, reducing mean absolute error by over 9% compared to the naïve model. While this represents an improvement in the current state of the art, we believe there is room for further work to generate high quality individual patient predictions. More sophisticated models based on ED workflows may lead to greater predictive power to prospectively estimate patient throughput times at arrival.

Download paper. ———————— File: 2015-12-31-Arena-Simulation-Modeling-Course.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Arena Simulation Modeling Course” categories:

- Blog

- Project

- Teaching tags:

- operations research

- simulation

- industrial engineering

- teaching

- Arena excerpt: “Discrete event simulation and operations modeling for undergrads.” —

I developed an online course to introduce the Arena simulation application. Arena is a discrete event simulation tool that is widely used throughout the field of industrial engineering. Despite its frequent use and inclusion in undergraduate curicula it is often not well understood by students. This is due to a lack of high quality training materials.

I taught an in-person simulation lab (ISyE 321) and assisted in teaching a theory of simulation course (ISyE 320) with Dr. Laura Albert in 2015 at the University of Wisconsin. During this time I developed a series of modules to show off the functionality of Arena. I subsequently recorded these modules and developed a free online course that is on youtube.

Here’s the first video in the online Arena course that I developed:

I also developed accompanying presentation slides, exercises, and Arena files. If you are interested in accessing these materials please contact me.

File: 2016-02-13-Cherry-Picking.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Cherry Picking Patients: Examining the Interval Between Patient Rooming and Resident Self-assignment” categories:

- Blog

- Research tags:

- Blog

- Research

- machine learning

- artificial intelligence

- healthcare

- medicine

- electronic health record excerpt: “Investigating the impact of chief complaints on resident physician self-assignment times in emergency departments.” —

Study titled “Cherry Picking Patients: Examining the Interval Between Patient Rooming and Resident Self-assignment”. We aimed to evaluate the association between patient chief complaint and the time interval between patient rooming and resident physician self-assignment (“pickup time”). The team hypothesized that significant variation in pickup time would exist based on chief complaint, thereby uncovering resident preferences in patient presentations.[^1]

The authorship team consisted of Brian W. Patterson MD, MPH, Robert J. Batt PhD, Morgan D. Wilbanks MD, myself, Mary C. Westergaard MD, and Manish N. Shah MD, MPH.

Abstract

Objective

We aimed to evaluate the association between patient chief complaint and the time interval between patient rooming and resident physician self-assignment (“pickup time”). We hypothesized that significant variation in pickup time would exist based on chief complaint, thereby uncovering resident preferences in patient presentations.

Methods

A retrospective medical record review was performed on consecutive patients at a single, academic, university-based emergency department with over 50,000 visits per year. All patients who presented from August 1, 2012, to July 31, 2013, and were initially seen by a resident were included in the analysis. Patients were excluded if not seen primarily by a resident or if registered with a chief complaint associated with trauma team activation. Data were abstracted from the electronic health record (EHR). The outcome measured was “pickup time,” defined as the time interval between room assignment and resident self-assignment. We examined all complaints with >100 visits, with the remaining complaints included in the model in an “other” category. A proportional hazards model was created to control for the following prespecified demographic and clinical factors: age, race, sex, arrival mode, admission vital signs, Emergency Severity Index code, waiting room time before rooming, and waiting room census at time of rooming.

Results

Of the 30,382 patients eligible for the study, the median time to pickup was 6 minutes (interquartile range = 2–15 minutes). After controlling for the above factors, we found systematic and significant variation in the pickup time by chief complaint, with the longest times for patients with complaints of abdominal problems, numbness/tingling, and vaginal bleeding and shortest times for patients with ankle injury, allergic reaction, and wrist injury.

Conclusions

A consistent variation in resident pickup time exists for common chief complaints. We suspect that this reflects residents preferentially choosing patients with simpler workups and less perceived diagnostic ambiguity. This work introduces pickup time as a metric that may be useful in the future to uncover and address potential physician bias. Further work is necessary to establish whether practice patterns in this study are carried beyond residency and persist among attendings in the community and how these patterns are shaped by the information presented via the EHR.

Full Text

Bibliography

[^1]: Patterson, B. W., Batt, R. J., Wilbanks, M. D., Otles, E., Westergaard, M. C., & Shah, M. N. (2018). Cherry Picking Patients: Examining the Interval Between Patient Rooming and Resident Self-assignment. Academic Emergency Medicine, 25(7), 742-751.

File: 2016-05-31-Forecasting-ED-Patient-Admissions.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Forecasting ED Patient Admissions Utilizing ML” categories:

- Blog

- Research tags:

- Blog

- Research

- emergency medicine

- medicine

- healthcare

- health system engineering

- artificial intelligence

- machine learning

- operations research excerpt: “Using machine learning to predict the likelihood of inpatient admission at the time of patient triage in emergency departments.” —

“Forecasting Emergency Department Patient Admissions Utilizing Machine Learning” was a clinical abstract submitted to Academic Emergency Medicine. In this study, we aimed to predict the need for admission at the time of patient triage utilizing data already available in the electronic health record (EHR). We performed a retrospective analysis of EHR-derived data to evaluate the effectiveness of machine learning techniques in predicting the likelihood of admission for patient encounters in an academic emergency department. We hypothesized that more comprehensive & inclusive models would provide greater predictive power.

This work was done in conjunction with Dr. Brian Patterson, Dr. Jillian Gorski, and Dr. Laura Albert.

Abstract

Background

Multiple studies have identified inpatient bed availability as a key metric for Emergency Department operational performance. Early planning for patient admissions may allow for optimization of hospital resources.

Objectives

Our study aimed to predict the need for admission at the time of patient triage utilizing data already available in the electronic health record (EHR). We performed a retrospective analysis of EHR derived data to evaluate the effectiveness of machine learning techniques in predicting the likelihood of admission for patient encounters in an academic emergency department. We hypothesized that more comprehensive & inclusive models would provide greater predictive power.

Methods

All patients who presented from 1/1/2012 to 12/31/2013 and met inclusion criteria were included in the analysis. The data were then partitioned into two sets for training and testing. The primary outcome measured was the ability of the trained models to discern the future admission status of an encounter, measured in terms of area under the receiver operator curve (ROC AUC). A secondary outcome was accuracy (ACC). Model features included a mix of patient specific factors (demographics, triage vital signs, visit and chief complaint history), the state of the ED (census and other performance metrics); and timing factors (time of day, etc.). The most comprehensive models included 682 variables, encoding 328 features, aggregated into 3 feature groups.

Results

Our final analysis included 91,060 patient encounters. 28,838 (31.7%) of these encounters resulted in an inpatient admission. Compared to using a naïve model, single feature group models provided improved predictive abilities (1.8% - 50.8% improvement in ROC AUC), see figure for details. More sophisticated models, including all available feature groups provided greater predictive power with the greatest achieved at ROC AUC score of 0.756.

Conclusion

We have demonstrated that including information about incoming patients and the state of the ED at the time of triage can aid in the prediction of individual patients’ likelihood of admission. More sophisticated models using claims, weather, and social media data may lead to greater predictive power to prospectively estimate patient admission likelihood at arrival.

Full Text

Download paper.

File: 2016-06-31-I-PrACTISE-Colloquia-Primary-Care-Predictive-Analytics.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “I-PrACTISE Colloquium Primary Care & Predictive Analytics” categories:

- Blog

- Talk tags:

- primary care

- medicine

- healthcare

- machine learning

- artificial intelligence

- operations research

- industrial engineering excerpt: “Exploring the potential benefits and challenges of integrating ML into primary care.” —

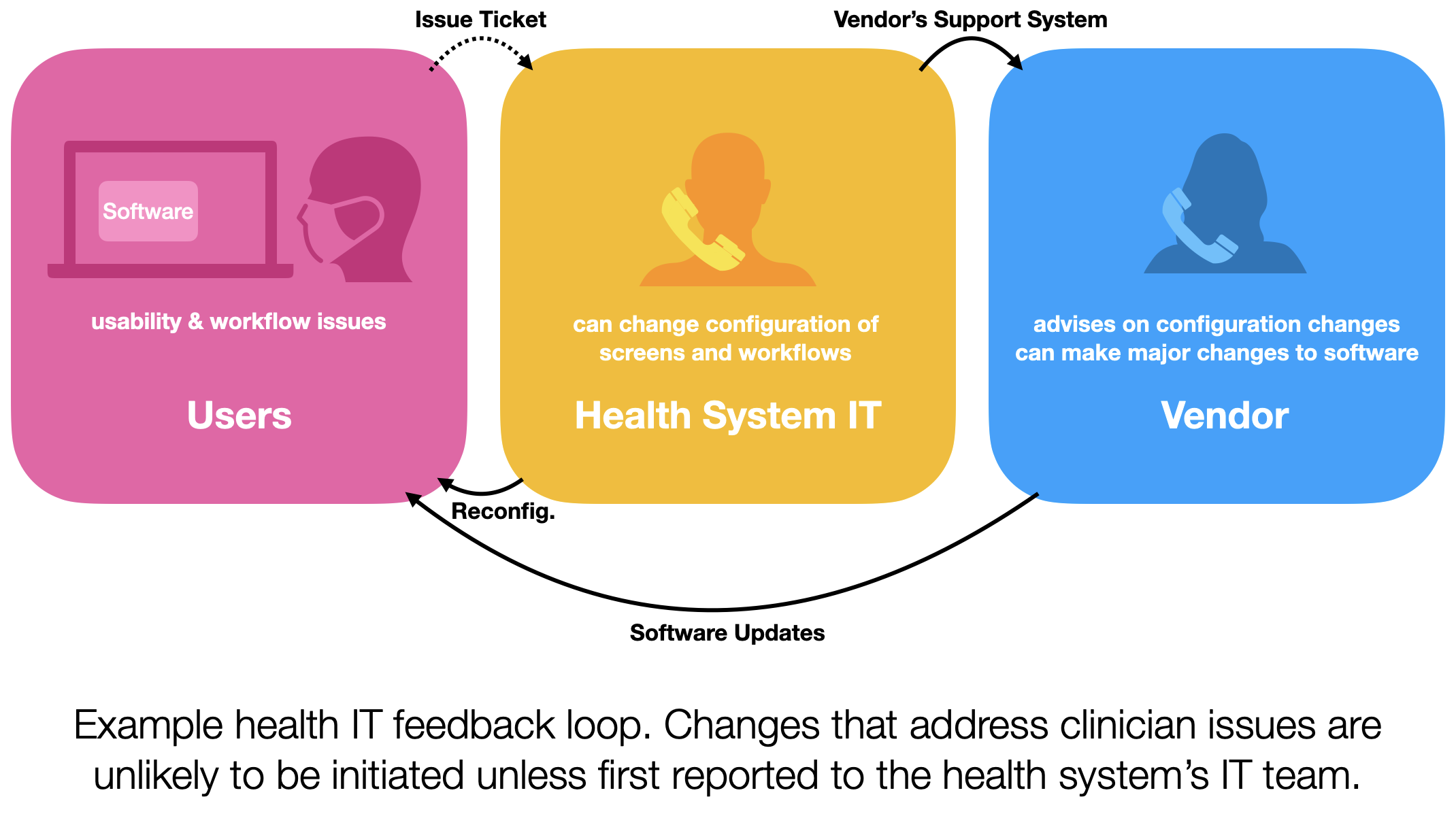

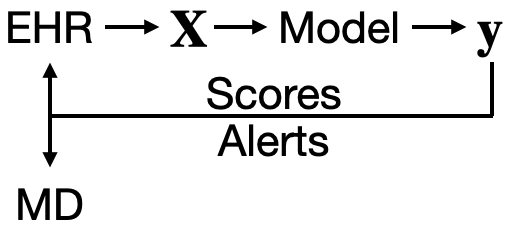

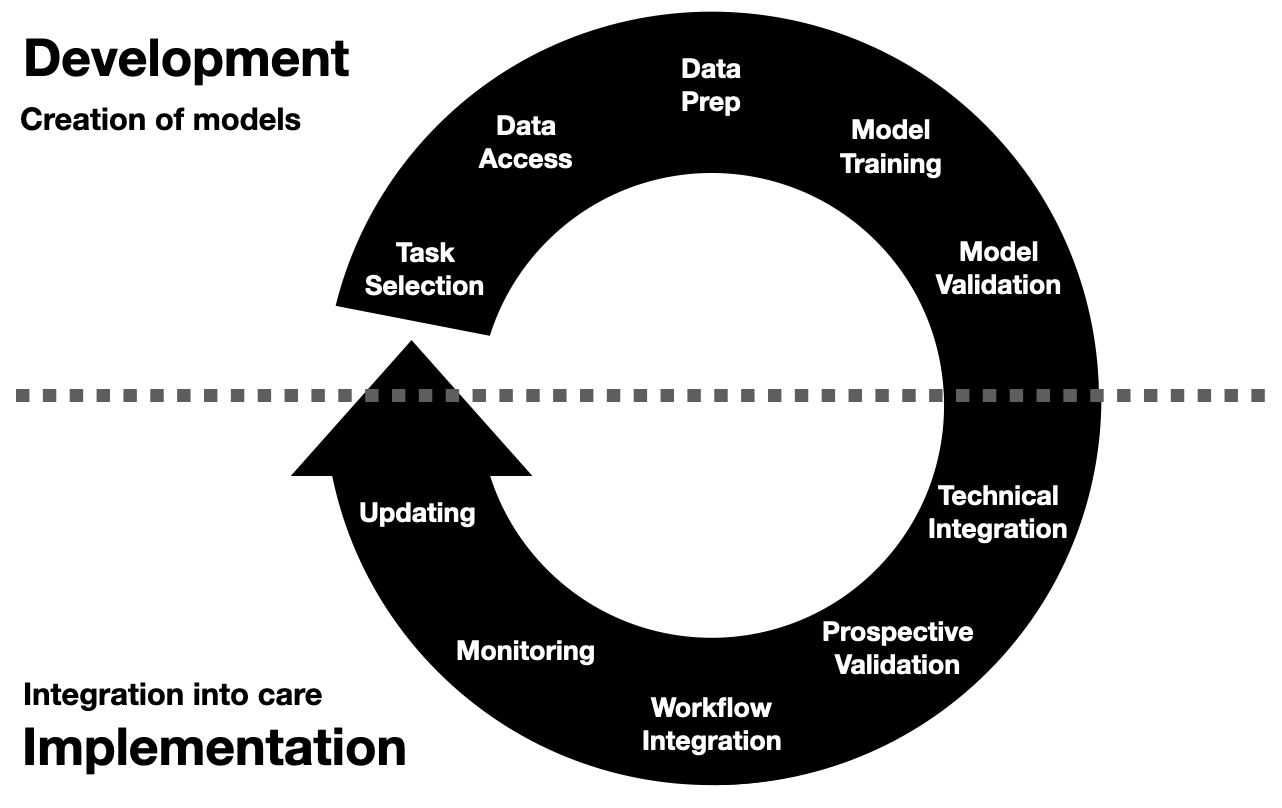

I had the opportunity to give a talk titled “Primary Care & Predictive Analytics” as a part of the I-PrACTISE colloquia series. We discussed artificial intelligence/machine learning and their applications in medicine, with a particular focus on primary care. In the presentation, I aimed to demystify machine learning, discuss its potential benefits in healthcare, and address the challenges associated with implementing these cutting-edge techniques.

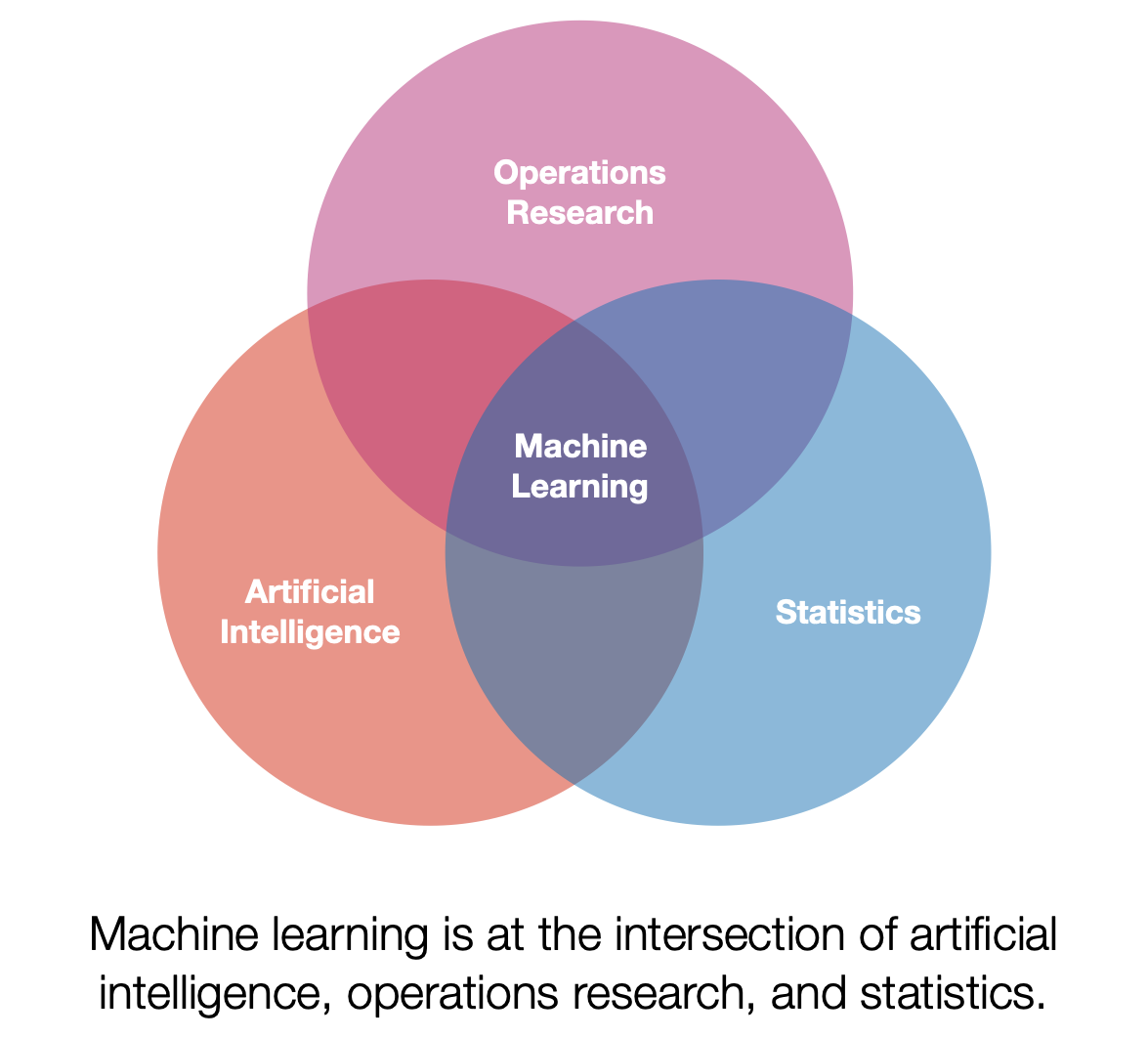

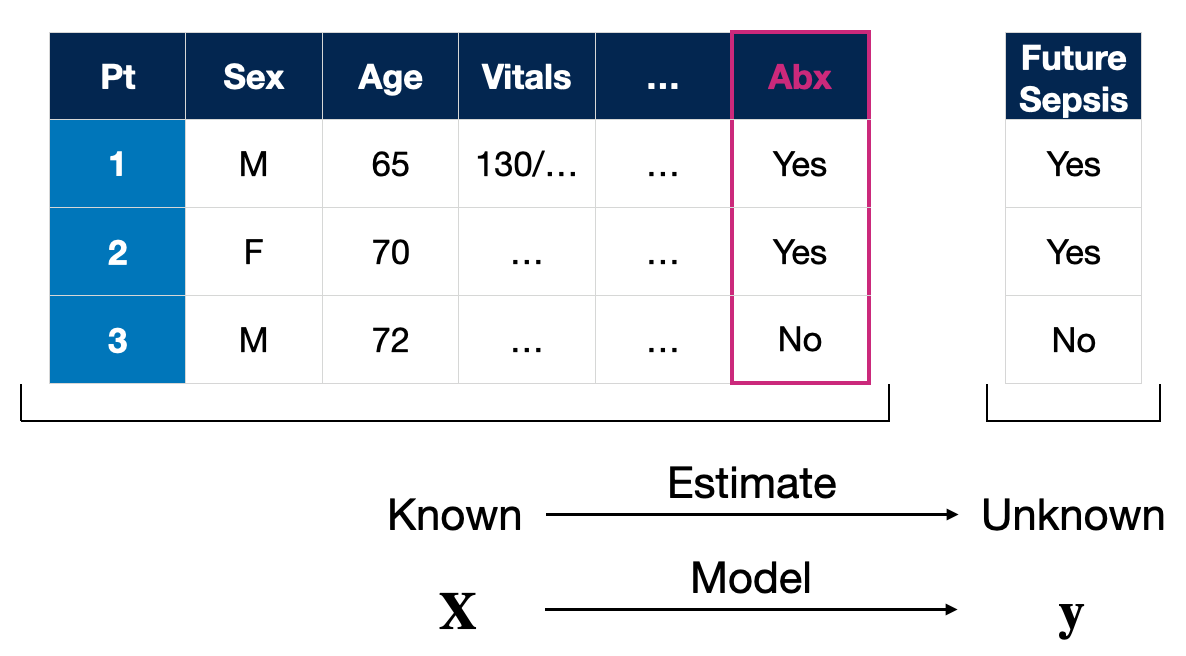

What is Machine Learning?

Machine learning is a discipline that explores the construction and study of algorithms that can learn from data. These algorithms improve their performance at specific tasks as they gain experience, which is often measured in terms of data. In my talk, I explained the concept of machine learning by drawing parallels between training an algorithm and training an undergraduate. Just as we teach undergraduates general concepts and facts that they then synthesize and apply to specific situations, we train algorithms using data to improve their performance at a given task.

Applications in Medicine and Primary Care

Machine learning has the potential to revolutionize the field of medicine, and primary care is no exception. By leveraging vast amounts of data, we can train algorithms to predict patient outcomes, diagnose conditions more accurately, and identify potential treatment options. For example, we could use machine learning to analyze tumor samples and train a model to evaluate new samples, helping doctors make more informed decisions about cancer diagnosis and treatment.

Challenges and Considerations

Despite its potential, there are several challenges to integrating machine learning into healthcare, particularly in sensitive areas like primary care. One of the key issues I addressed in my talk is the need for collaboration between engineers, computer scientists, statisticians, and healthcare professionals to ensure that these advanced techniques are applied responsibly and effectively.

Additionally, it is crucial to consider the human factors involved in implementing machine learning in healthcare settings. Understanding how healthcare providers interact with and use these algorithms is essential to ensuring their successful integration into medical practice.

Looking Ahead

As we continue to explore the potential of machine learning in primary care and the broader medical field, it is vital to remain focused on responsible development and implementation. By collaborating across disciplines and considering the human factors involved, we can work towards harnessing the power of machine learning to improve patient outcomes and revolutionize healthcare.

Video

File: 2016-07-01-Metastar-Community-Pharmacy_Initiative.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “A community pharmacy initiative to decrease hospital readmissions by increasing patient adherence and competency of therapy” categories:

- Blog

- Research tags:

- Blog

- Research

- medicine

- healthcare

- industrial engineering

- health system engineering

- data science

- quality improvement excerpt: “Pharmacy based interventions may help to reduce hospital admissions.” —

While working as the lead data scientist at MetaStar I helped to analyze the impact of a community pharmacy based intervention to reduce the rate of hospital admissions and readmissions. Patients enrolled in the intervention had the community pharamcy deliver medications to the homes of patients and educate them as well. We found that enrolling patients in the program reduced their rate of admissions.

Abstract

Background

Direct pharmacist care has been associated with substantial reduction in hospital admission and readmission rates and other positive outcomes, as compared with the absence of such care.

Objective

To decrease readmissions for community pharmacy patients through a program of improved medication packaging, delivery and patient education.

Design

Comparison of the number of admissions and readmissions for each patient enrolled in the program, comparing the time elapsed since enrollment with the equivalent period prior to enrollment.

Setting

A community pharmacy in Kenosha, Wisconsin.

Patients

Medicare beneficiaries served by the community pharmacy conducting the intervention. This includes 263 patients, 167 of which are Medicare beneficiaries, who have been placed in the intervention group as of June 2016.

Intervention

A voluntary program to package medications according to patient-specific characteristics and physician orders, to deliver medication to patients’ homes, and to educate and follow up with patients regarding problems with adherence.

Measurements

Hospital admissions and readmissions post-enrollment as compared with the equivalent pre-enrollment period.

Results

An analysis that limits the study period to a year centered on the patient’s enrollment date in the PACT intervention found a highly statistically significant (p < 0.01) reduction in admissions. An analysis that included the entire duration of the patient’s enrollment in PACT also found a statistically significant (p < 0.001) reduction in admissions. However, neither analytic technique found a statistically significant reduction in readmissions (p=0.2 and 0.1 respectively).

Limitations

That the study was unable to show a decrease in readmissions to accompany the decrease in admissions may be due to the success of the intervention in decreasing the denominator as well as the numerator of the readmissions measure. In addition, the study has not stratified for changes in the intervention over time, and for differences in patient characteristics or outcomes other than admissions and readmissions.

Full Text

File: 2016-08-01-INFORMS-in-the-News.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Quoted in INFORMS in the News” categories:

- Blog

- Press tags:

- Blog

- Press excerpt: “Starting a new INFORMS student chapter.” —

Over the course of the 2015-2016 school year I worked with several other students to start a student chapter of INFORMS at UW - Madison. After putting together bylaws and dealing with red tape we got the new student organization started. Additionally, was quoted in INFORMS in the News regarding setting up the University of Wisconsin student INFORMS chapter.

File: 2016-09-19-Impact-of-ED-Census-on-Admission.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “The Impact of ED Census on the Decision to Admit” categories:

- Blog

- Research tags:

- Blog

- Research

- machine learning

- artificial intelligence

- healthcare

- medicine

- electronic health record

- operations research

- health system engineering excerpt: “Emergency Department disposition decisions are influenced not only by objective measures of a patient’s disease state, but also by census.” —

Academic Emergency Medicine paper studying the impact of ED census on admission decisions: The Impact of Emergency Department Census on the Decision to Admit.

Jillian K. Gorski, Robert J. Batt, PhD, myself, Manish N. Shah, MD MPH, Azita G. Hamedani MD, MPH, MBA, and Brian W. Patterson MD, MPH, studied the impact of emergency department (ED) census on disposition decisions made by ED physicians. Our findings reveal that disposition decisions in the ED are not solely influenced by objective measures of a patient’s condition, but are also affected by workflow-related concerns.

The retrospective analysis involved 18 months of all adult patient encounters in the main ED at an academic tertiary care center. The results demonstrated that both waiting room census and physician load census were significantly associated with an increased likelihood of patient admission. This highlights the need to consider workflow-related factors when making disposition decisions, in order to ensure optimal patient care and resource allocation in emergency departments.

Abstract

Objective

We evaluated the effect of emergency department (ED) census on disposition decisions made by ED physicians.

Methods

We performed a retrospective analysis using 18 months of all adult patient encounters seen in the main ED at an academic tertiary care center. Patient census information was calculated at the time of physician assignment for each individual patient and included the number of patients in the waiting room (waiting room census) and number of patients being managed by the patient’s attending (physician load census). A multiple logistic regression model was created to assess the association between these census variables and the disposition decision, controlling for potential confounders including Emergency Severity Index acuity, patient demographics, arrival hour, arrival mode, and chief complaint.

Results

A total of 49,487 patient visits were included in this analysis, of whom 37% were admitted to the hospital. Both census measures were significantly associated with increased chance of admission; the odds ratio (OR) per patient increase for waiting room census was 1.011 (95% confidence interval [CI] = 1.001 to 1.020), and the OR for physician load census was 1.010 (95% CI = 1.002 to 1.019). To put this in practical terms, this translated to a modeled rise from 35.3% to 40.1% when shifting from an empty waiting room and zero patient load to a 12-patient wait and 16-patient load for a given physician.

Conclusion

Waiting room census and physician load census at time of physician assignment were positively associated with the likelihood that a patient would be admitted, controlling for potential confounders. Our data suggest that disposition decisions in the ED are influenced not only by objective measures of a patient’s disease state, but also by workflow-related concerns.

Full Text

File: 2016-10-01-Cues-for-PE-diagnosis-in-the-ED.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Cues for PE Diagnosis in the Emergency Department: A Sociotechnical Systems Approach for Clinical Decision Support” categories:

- Blog

- Research tags:

- Blog

- Research

- emergency medicine

- medicine

- healthcare

- health system engineering

- human factors engineering excerpt: “American Medical Informatics Association Annual Symposium abstract focused on pulmonary embolism diagnosis.” —

American Medical Informatics Association Annual Symposium abstract. Work done in conjunction with Dr. Brian Patterson, MD MPH, Ann Schoofs Hundt, MS, Peter Hoonakker, PhD, and Pascale Carayon, PhD.

Pulmonary embolism (PE) diagnosis presents a significant challenge for emergency department (ED) physicians, as both missed or delayed diagnosis and overtesting can have serious consequences for patients. The implementation of health information technology, such as clinical decision support systems, has the potential to mitigate diagnostic errors and enhance the overall diagnostic process. However, to achieve this, the technology must be practical, user-friendly, and seamlessly integrate into clinical workflows. This calls for a sociotechnical systems approach to understand the cues involved in the PE diagnosis process and how they relate to the information available in electronic health records (EHRs).

In this study, we sought to comprehend the cues in the PE diagnosis process within the ED sociotechnical system and compare them to the information found in the EHR. The objective was to establish design requirements for clinical decision support for PE diagnosis in the ED.

Abstract

Pulmonary embolus (PE) is among the most challenging diagnoses made in the emergency department (ED). While missed or delayed diagnosis of PE is a major problem in the ED1, overtesting, which subjects patients to harm from radiation, overdiagnosis, and increased cost, is also a concern. Health information technology, such as clinical decision support, has the potential to reduce diagnostic errors and support the diagnostic process. However, this requires that the technology be useful and usable, and fit within the clinical workflow, providing justification for a sociotechnical systems approach. The purpose of this study is to understand cues in the PE diagnosis process in the ED sociotechnical system and to compare these cues to the information available in the EHR. This will help in defining design requirements for a clinical decision support for PE diagnosis in the ED. Using the Critical Decision Method, we interviewed 16 attending physicians and residents in three EDs of two academic medical centers and one community hospital. The total duration of the interviews was over 12 hours. Using an iterative qualitative content analysis, we identified 4 categories of cues: (1) patient signs and symptoms (e.g., leg swelling, chest pain), (2) patient risk factors (e.g., immobilization, surgery or trauma, cancer), (3) explicit risk scoring (e.g., PERC), and (4) clinical judgment. We then mapped these cues to information available in the EHR at one of the participating hospitals. About 80-90% of the cues may be available in the EHR; many of them rely on the physical exam and information obtained by talking to the patient. This finding underlines the need to identify the various roles involved in obtaining, documenting and reviewing the information that informs the PE diagnostic process. The PE diagnostic process in the ED is distributed across multiple roles, individuals and technologies in a sometimes chaotic and often busy physical and organizational environment.

Full Text

File: 2016-12-13-WHO-human-factors.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “WHO Technical Series on Safer Primary Care: Human Factors” categories:

- Blog

- Research tags:

- Blog

- Research

- primary care

- medicine

- healthcare

- industrial engineering

- health system engineering

- human factors engineering

- I-PrACTISE excerpt: “Human factors engineering can play an important role in improving the safety of primary care.” —

Tosha Wetterneck, MD MS, Richard Holden, PhD, John Beasley, MD, and myself wrote a technical chapter for the World Health Organization. Link to technical chapter.

Its part of the World Health Organization’s technical series on safer primary care, and has a particular focus on human factors. This report highlights the crucial role that human factors play in ensuring patient safety, improving the quality of care, and optimizing the overall efficiency of primary care systems. By understanding the interaction between humans, systems, and technologies, healthcare organizations can implement more effective strategies to reduce errors, enhance communication, and ultimately improve patient outcomes.

This monograph describes what “human factors” are and what relevance this approach has for improving safety in primary care. This section defines human factors. The next sections outline some of the key human factors’ issues in primary care and the final sections explore potential practical solutions for safer primary care.

Full Text

Download technical chapter. ———————— File: 2017-12-31-M-is-for-Medicine.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “M is for Medicine” categories:

- Blog

- Project tags:

- iOS

- mobile development

- software development

-

Apple

I developed an an iMessage Sticker Pack for all those interested in medicine, health, and the human body. Download it from the Apple AppSore.

File: 2018-01-18-Immune-Genomic-Expression-Correlates-Outcomes-in-Trauma-Patients.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Immune Genomic Expression Correlates with Discharge Location and Poor Outcomes in Trauma Patients” categories:

- Blog

- Research tags:

- Blog

- Research

- trauma surgery

- genomics

- medicine

-

healthcare

Academic Surgical Congress abstract, can be found here.

Download abstract.

File: 2019-05-20-AAFP-Innovation-Fellow.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “AAFP’s Innovation Fellow Studies Tech, Digital Scribes” categories:

- Blog

- Press tags:

- Blog

-

Press

Discussed my work studying digital scribes with David Mitchell. Read the interview.

File: 2019-08-10-RTW-after-injury-sequential-prediction-and-decision.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Return to Work After Injury: A Sequential Prediction & Decision Problem” categories:

- Blog

- Research tags:

- Blog

- Research

- occupational health

- return to work

- medicine

- healthcare

- artificial intelligence

-

machine learning

Machine Learning for Healthcare Conference clinical abstract, can be found here.

Download abstract.

File: 2020-04-20-COVID-Staffing-Project.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “COVID Staffing Project: Three Medical Students’ Contributions” categories:

- Blog

- Press tags:

- Blog

-

Press

Kenneth Abbott, Alexandra Highet and I catalogued our contributions to the COVID Staffing project in a Dose of Reality Blog Post.

File: 2020-04-22-COVID-19-Visualization.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “COVID-19 Analysis” categories:

- Blog

- Project tags:

- data science

-

covid

Quick exploration of case spread and mortality rates of the novel coronavirus.

Tableau embed code courtesy of San Wang.

File: 2020-05-12-Faster-than-COVID.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Faster than COVID: a computer model that predicts the disease’s next move” categories:

- Blog

- Press tags:

- Blog

- Press

- covid

- artificial intelligence

- machine learning

-

early warning system

Michigan Engineering News covered our work on the M-CURES COVID deterioration model that I helped to develop and led the implementation of. Read the article here.

File: 2020-05-29-AADL-Friday-Night-AI.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Ann Arbor District Library - Friday Night AI: AI and COVID-19” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- covid

- machine learning

- artificial intelligence

-

operations research

Virtual panel discussion on how artificial intelligence could guide the response to the coronavirus outbreak. Hosted by the Ann Arbor District Library. Panel included speakers from across the Michigan AI and Michigan Medicine.

File: 2020-05-30-its-time-to-bring-human-factors-to-primary-care.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “It’s time to bring human factors to primary care policy and practice” categories:

- Blog

- Research tags:

- Blog

- Research

- primary care

- medicine

- healthcare

- industrial engineering

- health system engineering

- human factors engineering

-

I-PrACTISE

Appeared in Applied Ergonomics. Link

Abstract

Primary health care is a complex, highly personal, and non-linear process. Care is often sub-optimal and professional burnout is high. Interventions intended to improve the situation have largely failed. This is due to a lack of a deep understanding of primary health care. Human Factors approaches and methods will aid in understanding the cognitive, social and technical needs of these specialties, and in designing and testing proposed innovations. In 2012, Ben-Tzion Karsh, Ph.D., conceived a transdisciplinary conference to frame the opportunities for research human factors and industrial engineering in primary care. In 2013, this conference brought together experts in primary care and human factors to outline areas where human factors methods can be applied. The results of this expert consensus panel highlighted four major research areas: Cognitive and social needs, patient engagement, care of community, and integration of care. Work in these areas can inform the design, implementation, and evaluation of innovations in Primary Care. We provide descriptions of these research areas, highlight examples and give suggestions for future research. ———————— File: 2020-09-23-UM-Precision-Health-Symposium.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “UMich Precision Health Symposium: Prediction & Prevention - Powering Precision Health” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- precision health

- machine learning

- artificial intelligence

-

operations research

Virtual panel discussion on precison health. A video segment from the 2020 University of Michigan Precision Health Virtual Symposium.

File: 2020-11-13-UM-Precision-Health-Onboarding-Session.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “UMich Precision Health Onboarding Session: Precision Health De-Identified RDW” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- precision health

- machine learning

- artificial intelligence

-

operations research

Precision Health Data Analytics & IT workgroup held an onboarding session for Engineering students who could use Precision Health tools and resources for their classes and research. I provided a technical demonstration on how to find and query the database through the sql server.

File: 2021-05-19-UMich-MSTP-Promo-Video.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “UMich MSTP Promo Video” categories:

- Blog

- Press tags:

- Blog

- Press

- medicine

-

MSTP

Was featured in the University of Michigan Medical Scientist Training Program recruiting video.

The MSTP at Michigan prepares physician scientists for careers in academic medicine with a focus on biomedical research. More than just an M.D. and Ph.D. spliced together, our program offers comprehensive support and guidance, integrating academic excellence and flexibility to help you reach your career goals.

File: 2021-07-21-External-Validation-of-a-Widely-Implemented-Proprietary-Sepsis-Prediction-Model-in-Hospitalized-Patients.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “External Validation of a Widely Implemented Proprietary Sepsis Prediction Model in Hospitalized Patients” categories:

- Blog

- Research tags:

- Blog

- Research

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

-

machine learning

JAMA Internal Medicine. Can be found here.

Key Points

Question

How accurately does the Epic Sepsis Model, a proprietary sepsis prediction model implemented at hundreds of US hospitals, predict the onset of sepsis?

Findings

In this cohort study of 27 697 patients undergoing 38 455 hospitalizations, sepsis occurred in 7% of the hosptalizations. The Epic Sepsis Model predicted the onset of sepsis with an area under the curve of 0.63, which is substantially worse than the performance reported by its developer.

Meaning

This study suggests that the Epic Sepsis Model poorly predicts sepsis; its widespread adoption despite poor performance raises fundamental concerns about sepsis management on a national level.

Abstract

Importance

The Epic Sepsis Model (ESM), a proprietary sepsis prediction model, is implemented at hundreds of US hospitals. The ESM’s ability to identify patients with sepsis has not been adequately evaluated despite widespread use.

Objective

To externally validate the ESM in the prediction of sepsis and evaluate its potential clinical value compared with usual care.

Design, Setting, and Participants

This retrospective cohort study was conducted among 27 697 patients aged 18 years or older admitted to Michigan Medicine, the academic health system of the University of Michigan, Ann Arbor, with 38 455 hospitalizations between December 6, 2018, and October 20, 2019.

Exposure

The ESM score, calculated every 15 minutes.

Main Outcomes and Measures

Sepsis, as defined by a composite of (1) the Centers for Disease Control and Prevention surveillance criteria and (2) International Statistical Classification of Diseases and Related Health Problems, Tenth Revision diagnostic codes accompanied by 2 systemic inflammatory response syndrome criteria and 1 organ dysfunction criterion within 6 hours of one another. Model discrimination was assessed using the area under the receiver operating characteristic curve at the hospitalization level and with prediction horizons of 4, 8, 12, and 24 hours. Model calibration was evaluated with calibration plots. The potential clinical benefit associated with the ESM was assessed by evaluating the added benefit of the ESM score compared with contemporary clinical practice (based on timely administration of antibiotics). Alert fatigue was evaluated by comparing the clinical value of different alerting strategies.

Results

We identified 27 697 patients who had 38 455 hospitalizations (21 904 women [57%]; median age, 56 years [interquartile range, 35-69 years]) meeting inclusion criteria, of whom sepsis occurred in 2552 (7%). The ESM had a hospitalization-level area under the receiver operating characteristic curve of 0.63 (95% CI, 0.62-0.64). The ESM identified 183 of 2552 patients with sepsis (7%) who did not receive timely administration of antibiotics, highlighting the low sensitivity of the ESM in comparison with contemporary clinical practice. The ESM also did not identify 1709 patients with sepsis (67%) despite generating alerts for an ESM score of 6 or higher for 6971 of all 38 455 hospitalized patients (18%), thus creating a large burden of alert fatigue.

Conclusions and Relevance

This external validation cohort study suggests that the ESM has poor discrimination and calibration in predicting the onset of sepsis. The widespread adoption of the ESM despite its poor performance raises fundamental concerns about sepsis management on a national level. ———————— File: 2021-07-21-STAT-News-Epic-sepsis.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “STAT News: A popular algorithm to predict sepsis misses most cases and sends frequent false alarms, study finds” categories:

- Blog

- Research tags:

- Blog

- Press

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

- machine learning

-

STAT News

Casey Ross of STAT News covered our JAMA IM Epic Sepsis Model evaluation paper. Check out the article.

File: 2021-07-21-WIRED-An-Algorithm-That-Predicts-Deadly-Infections-Is-Often-Flawed.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “WIRED: An Algorithm That Predicts Deadly Infections Is Often Flawed” categories:

- Blog

- Research tags:

- Blog

- Press

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

- machine learning

-

WIRED

Tom Simonite of WIRED covered our JAMA IM Epic Sepsis Model evaluation paper. Check out the article.

File: 2021-07-22-The-Verge-A-hospital-algorithm-designed-to-predict-a-deadly-condition-misses-most-cases.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “The Verge: A hospital algorithm designed to predict a deadly condition misses most cases” categories:

- Blog

- Research tags:

- Blog

- Press

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

- machine learning

-

The Verge

Nicole Wetsman of The Verge covered our JAMA IM Epic Sepsis Model evaluation paper. Check out the article.

File: 2021-07-26-The-Washington-Post-A-hospital-algorithm-designed-to-predict-a-deadly-condition-misses-most-cases copy.md Creation Date: — title: “The Washington Post: Sepsis prediction tool used by hospitals misses many cases, study says. Firm that developed the tool disputes those findings.” categories:

- Blog

- Research tags:

- Blog

- Press

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

- machine learning

-

The Washington Post

Erin Blakemore of The Washington Post covered our JAMA IM Epic Sepsis Model evaluation paper. Check out the article.

File: 2021-08-01-Mind-the-Performance-Gap.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Mind the Performance Gap: Dataset Shift During Prospective Validation” categories:

- Blog

- Research tags:

- Blog

- Research

- machine learning

- artificial intelligence

- healthcare

- medicine

-

electronic health record

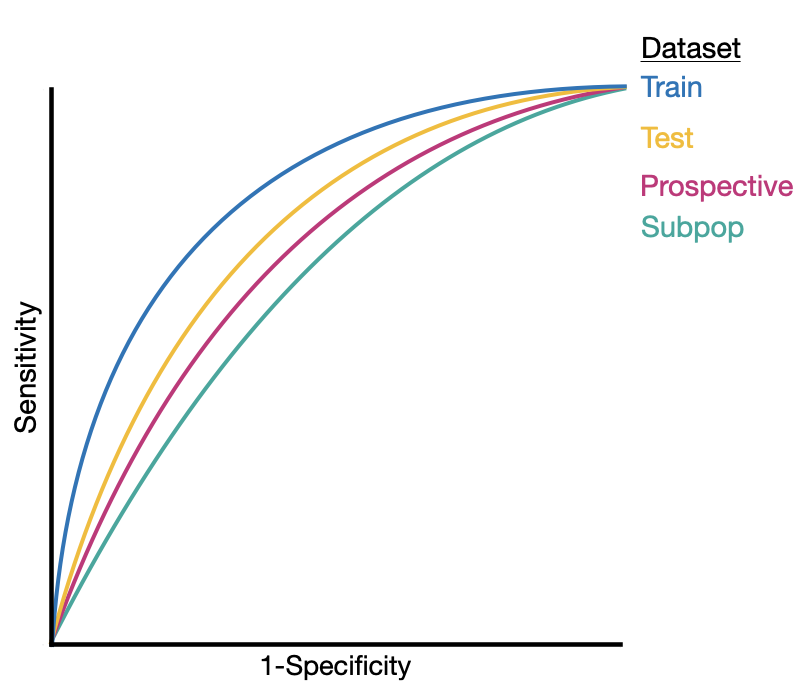

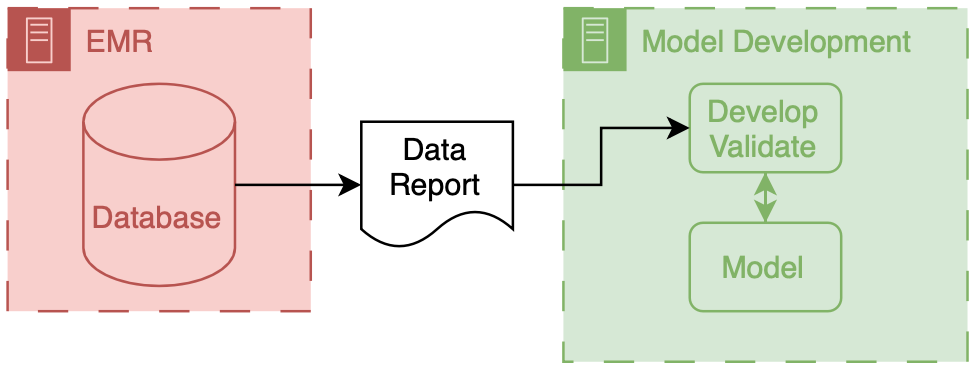

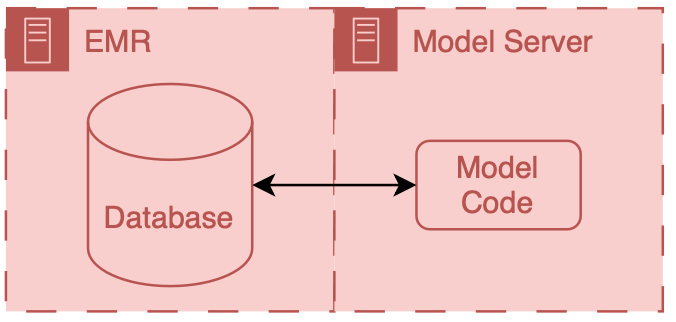

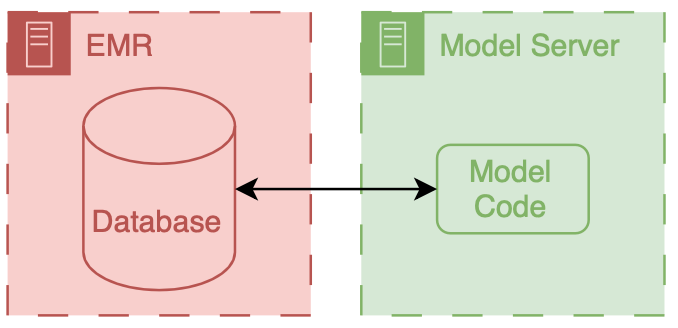

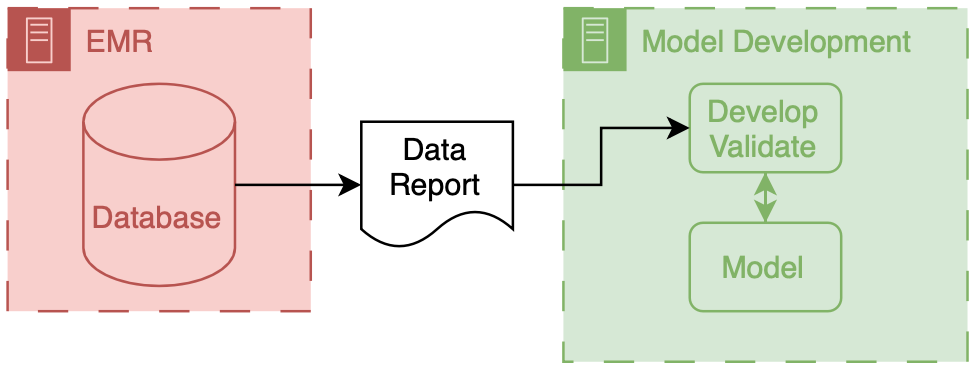

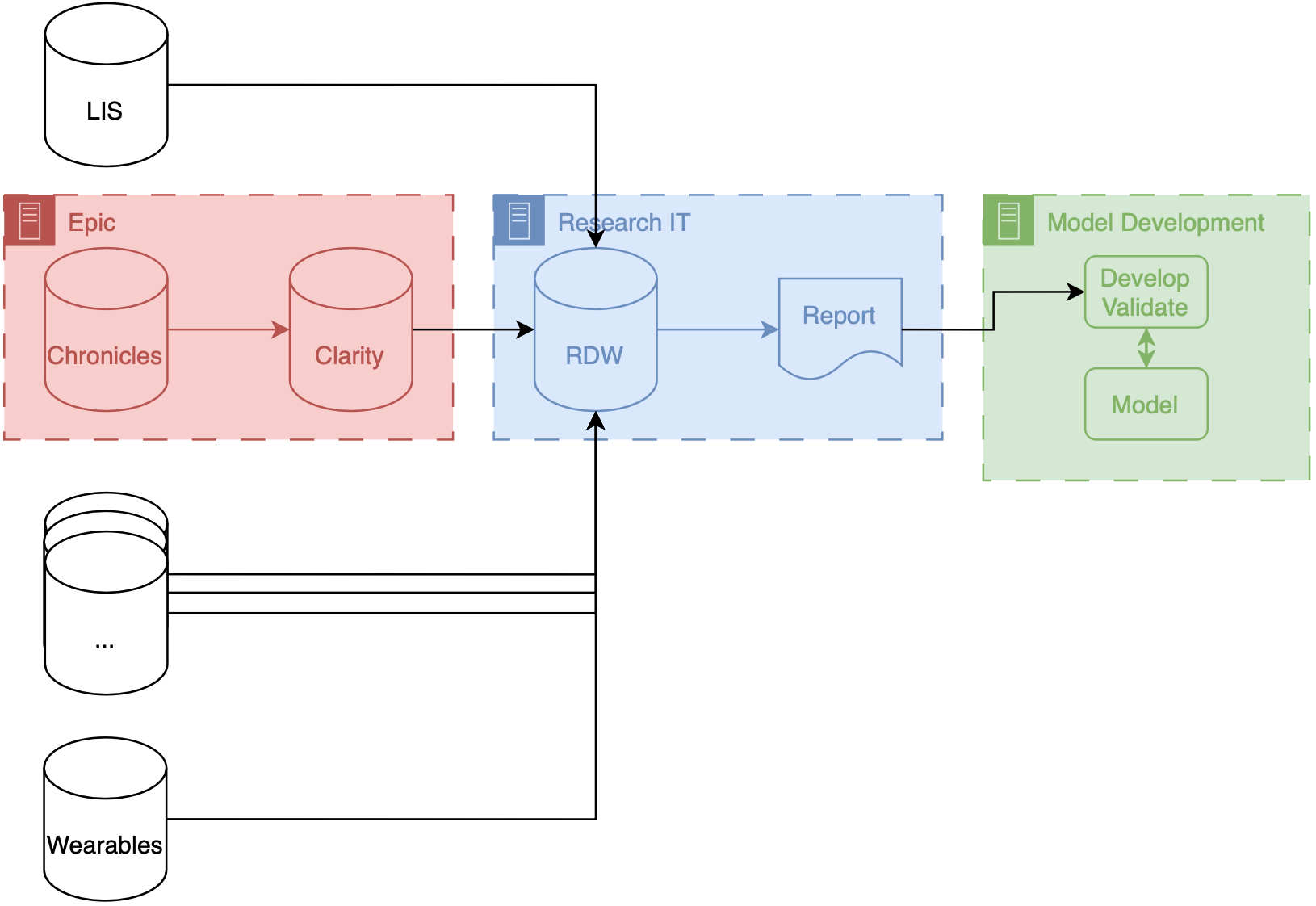

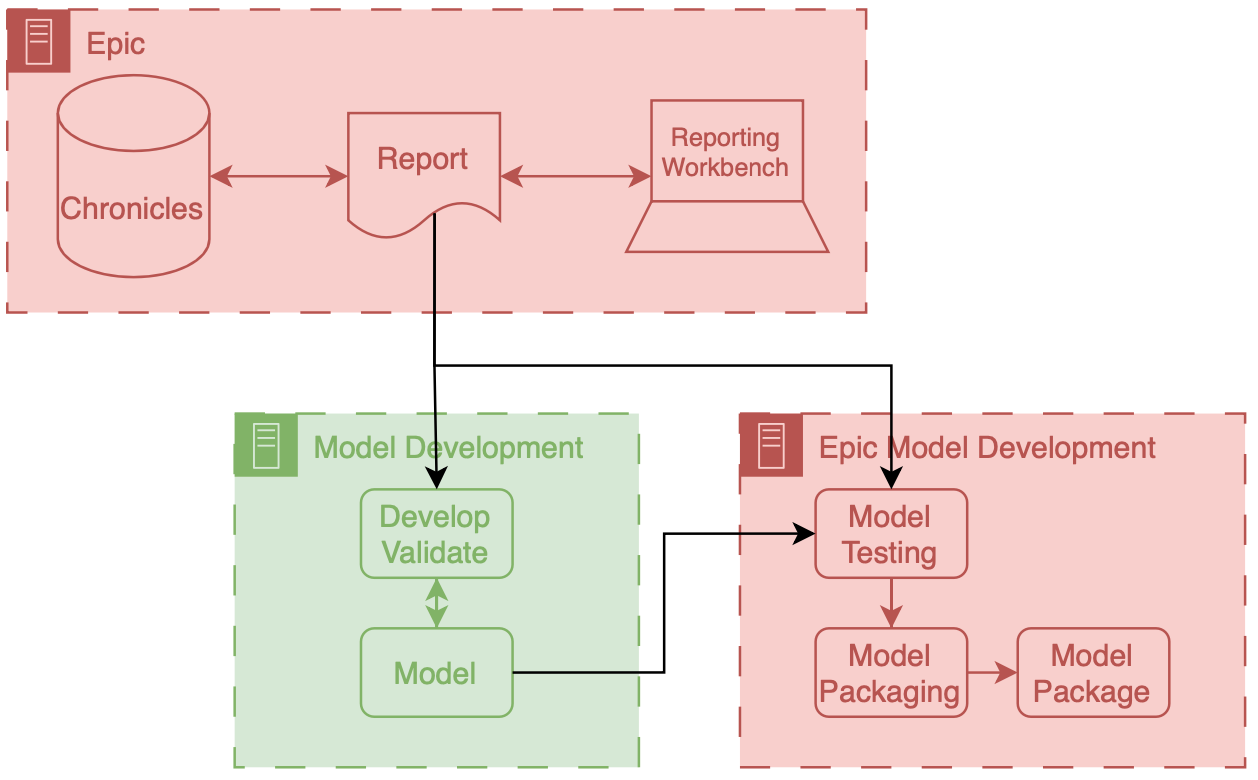

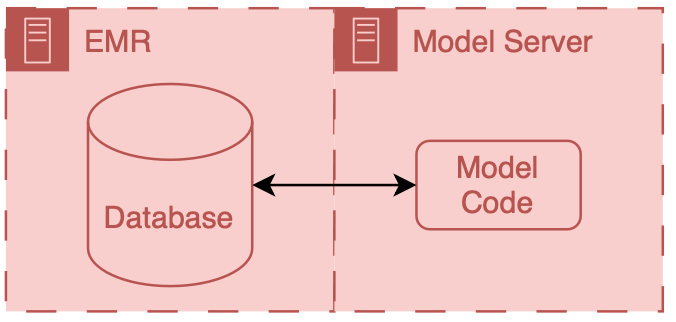

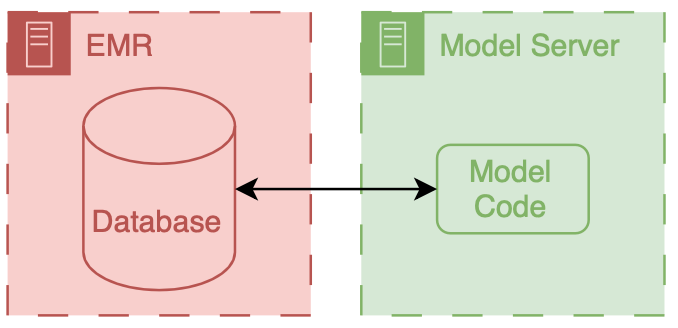

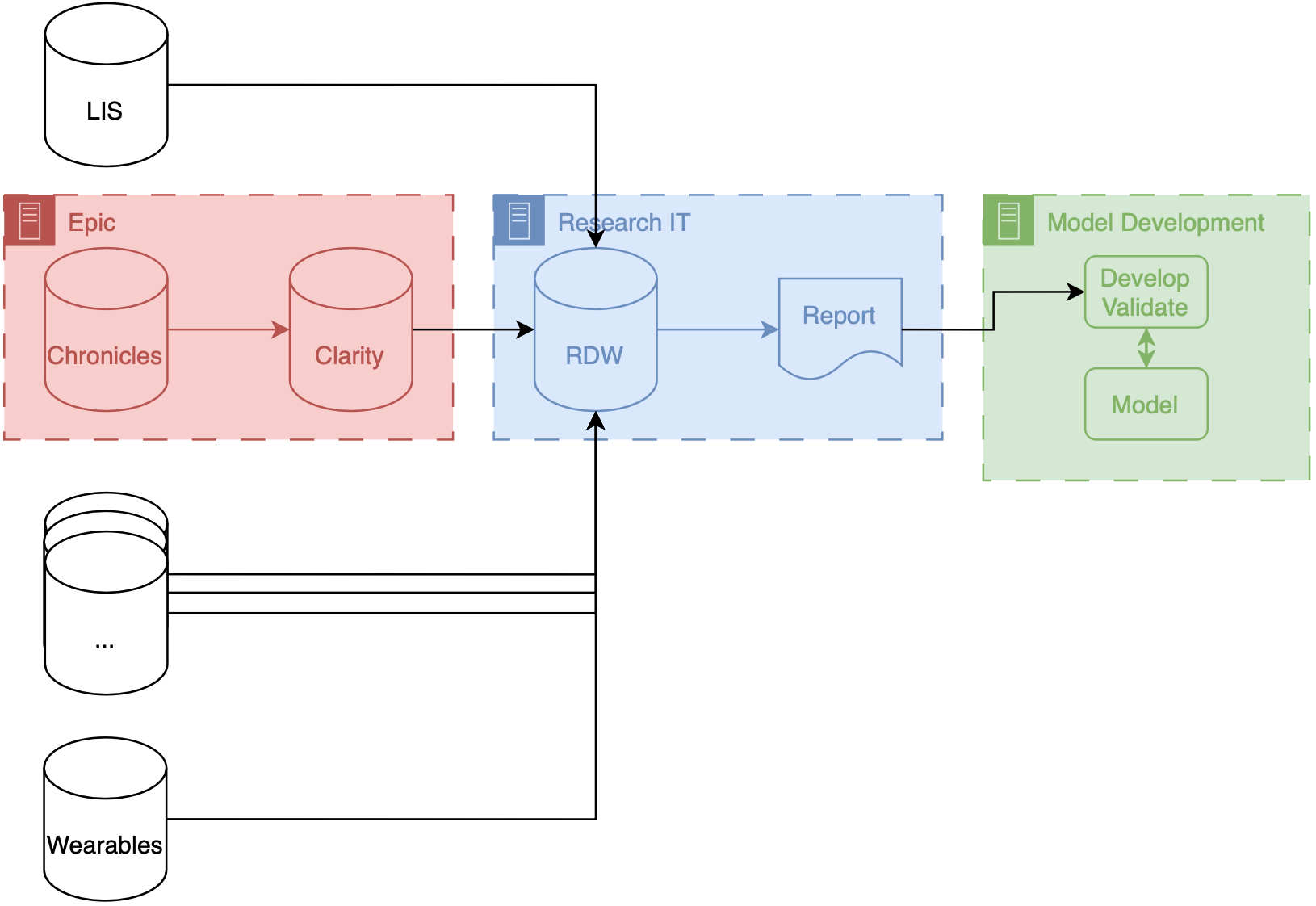

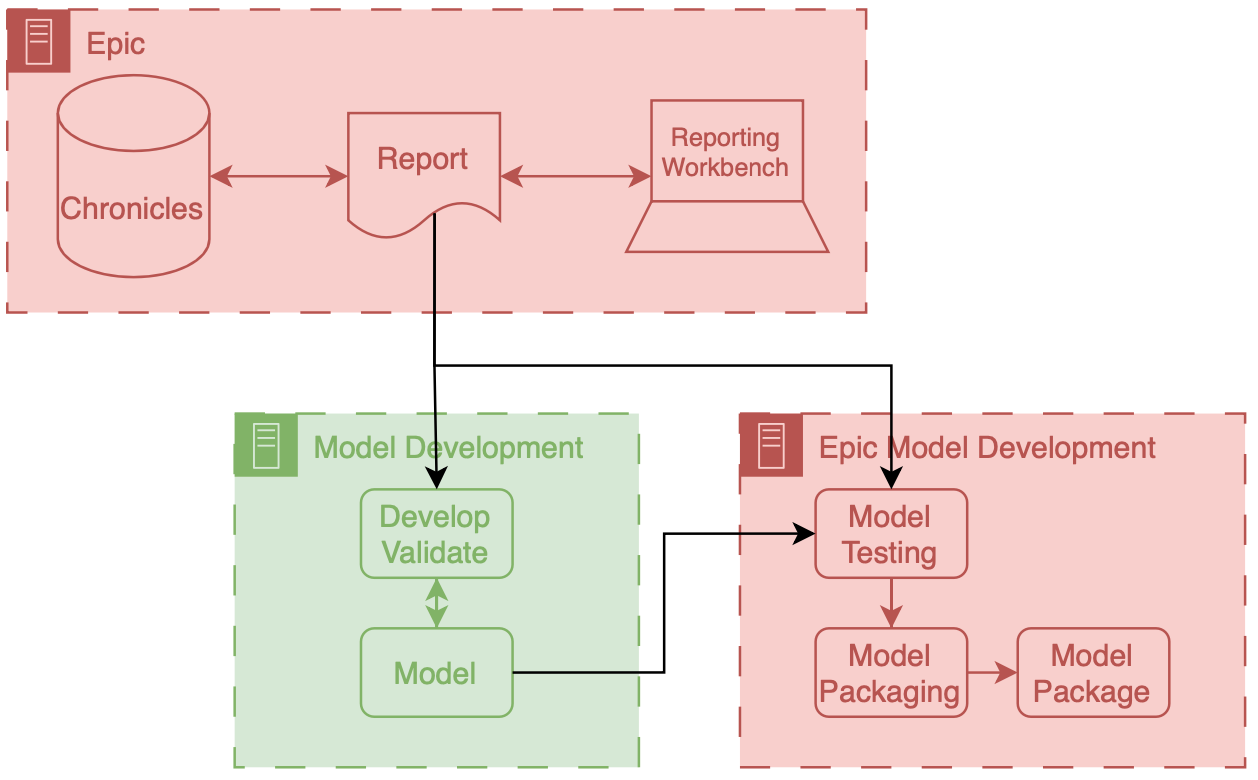

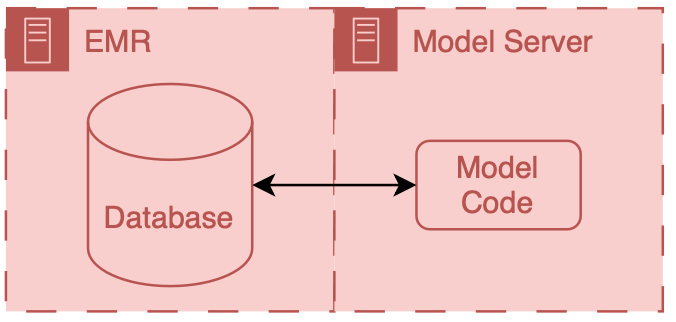

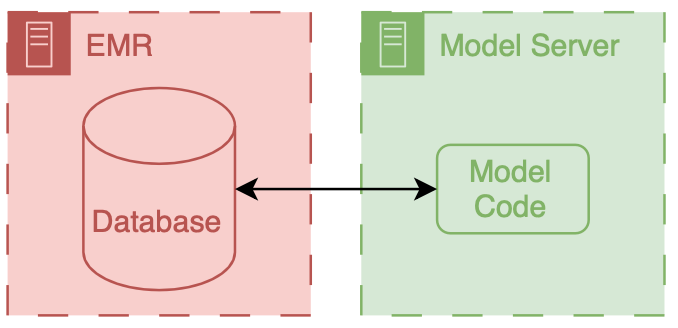

Our 2021 Machine Learning for Healthcare Conference paper! It discusses a special kind of dataset shift that is particularly pervasive and pernicious when developing and implementing ML/AI models for use in healthcare. Here’s a link to the Mind the Performance Gap paper that I authored with Jeeheh Oh, Benjamin Li, Michelle Bochinski, Hyeon Joo, Justin Ortwine, Erica Shenoy, Laraine Washer, Vincent B. Young, Krishna Rao, and Jenna Wiens.

Abstract

Once integrated into clinical care, patient risk stratification models may perform worse com- pared to their retrospective performance. To date, it is widely accepted that performance will degrade over time due to changes in care processes and patient populations. However, the extent to which this occurs is poorly understood, in part because few researchers re- port prospective validation performance. In this study, we compare the 2020-2021 (’20-’21) prospective performance of a patient risk stratification model for predicting healthcare- associated infections to a 2019-2020 (’19-’20) retrospective validation of the same model. We define the difference in retrospective and prospective performance as the performance gap. We estimate how i) “temporal shift”, i.e., changes in clinical workflows and patient populations, and ii) “infrastructure shift”, i.e., changes in access, extraction and transfor- mation of data, both contribute to the performance gap. Applied prospectively to 26,864 hospital encounters during a twelve-month period from July 2020 to June 2021, the model achieved an area under the receiver operating characteristic curve (AUROC) of 0.767 (95% confidence interval (CI): 0.737, 0.801) and a Brier score of 0.189 (95% CI: 0.186, 0.191). Prospective performance decreased slightly compared to ’19-’20 retrospective performance, in which the model achieved an AUROC of 0.778 (95% CI: 0.744, 0.815) and a Brier score of 0.163 (95% CI: 0.161, 0.165). The resulting performance gap was primarily due to in- frastructure shift and not temporal shift. So long as we continue to develop and validate models using data stored in large research data warehouses, we must consider differences in how and when data are accessed, measure how these differences may negatively affect prospective performance, and work to mitigate those differences. ———————— File: 2021-08-01-evaluating-a-widely-implemented-proprietary-deterioration-index-among-inpatients-with-COVID.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Evaluating a Widely Implemented Proprietary Deterioration Index Model among Hospitalized Patients with COVID-19” categories:

- Blog

- Research tags:

- Blog

- Research

- COVID

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

-

machine learning

Annals of the American Thoracic Society. Can be found here.

Abstract

Rationale

The Epic Deterioration Index (EDI) is a proprietary prediction model implemented in over 100 U.S. hospitals that was widely used to support medical decision-making during the coronavirus disease (COVID-19) pandemic. The EDI has not been independently evaluated, and other proprietary models have been shown to be biased against vulnerable populations.

Objectives

To independently evaluate the EDI in hospitalized patients with COVID-19 overall and in disproportionately affected subgroups.

Methods

We studied adult patients admitted with COVID-19 to units other than the intensive care unit at a large academic medical center from March 9 through May 20, 2020. We used the EDI, calculated at 15-minute intervals, to predict a composite outcome of intensive care unit–level care, mechanical ventilation, or in-hospital death. In a subset of patients hospitalized for at least 48 hours, we also evaluated the ability of the EDI to identify patients at low risk of experiencing this composite outcome during their remaining hospitalization.

Results

Among 392 COVID-19 hospitalizations meeting inclusion criteria, 103 (26%) met the composite outcome. The median age of the cohort was 64 (interquartile range, 53–75) with 168 (43%) Black patients and 169 (43%) women. The area under the receiver-operating characteristic curve of the EDI was 0.79 (95% confidence interval, 0.74–0.84). EDI predictions did not differ by race or sex. When exploring clinically relevant thresholds of the EDI, we found patients who met or exceeded an EDI of 68.8 made up 14% of the study cohort and had a 74% probability of experiencing the composite outcome during their hospitalization with a sensitivity of 39% and a median lead time of 24 hours from when this threshold was first exceeded. Among the 286 patients hospitalized for at least 48 hours who had not experienced the composite outcome, 14 (13%) never exceeded an EDI of 37.9, with a negative predictive value of 90% and a sensitivity above this threshold of 91%.

Conclusions

We found the EDI identifies small subsets of high-risk and low-risk patients with COVID-19 with good discrimination, although its clinical use as an early warning system is limited by low sensitivity. These findings highlight the importance of independent evaluation of proprietary models before widespread operational use among patients with COVID-19. ———————— File: 2021-08-05-MLHC-Presentation.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Machine Learning for Healthcare Conference: Characterizing the Performance Gap” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- machine learning

- artificial intelligence

-

operations research

Jeeheh Oh and I presented our work on dataset shift at the 2021 Machine Learning for Healthcare Conference. This talk briefly summarizes our our conference paper.

Abstract

Once integrated into clinical care, patient risk stratification models may perform worse com- pared to their retrospective performance. To date, it is widely accepted that performance will degrade over time due to changes in care processes and patient populations. However, the extent to which this occurs is poorly understood, in part because few researchers re- port prospective validation performance. In this study, we compare the 2020-2021 (’20-’21) prospective performance of a patient risk stratification model for predicting healthcare- associated infections to a 2019-2020 (’19-’20) retrospective validation of the same model. We define the difference in retrospective and prospective performance as the performance gap. We estimate how i) “temporal shift”, i.e., changes in clinical workflows and patient populations, and ii) “infrastructure shift”, i.e., changes in access, extraction and transfor- mation of data, both contribute to the performance gap. Applied prospectively to 26,864 hospital encounters during a twelve-month period from July 2020 to June 2021, the model achieved an area under the receiver operating characteristic curve (AUROC) of 0.767 (95% confidence interval (CI): 0.737, 0.801) and a Brier score of 0.189 (95% CI: 0.186, 0.191). Prospective performance decreased slightly compared to ’19-’20 retrospective performance, in which the model achieved an AUROC of 0.778 (95% CI: 0.744, 0.815) and a Brier score of 0.163 (95% CI: 0.161, 0.165). The resulting performance gap was primarily due to in- frastructure shift and not temporal shift. So long as we continue to develop and validate models using data stored in large research data warehouses, we must consider differences in how and when data are accessed, measure how these differences may negatively affect prospective performance, and work to mitigate those differences. ———————— File: 2021-10-11-CHEPS-Seminar.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “CHEPS Seminar: Engineering Machine Learning for Medicine” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- machine learning

- artificial intelligence

- operations research

- industrial engineering

-

health system engineering

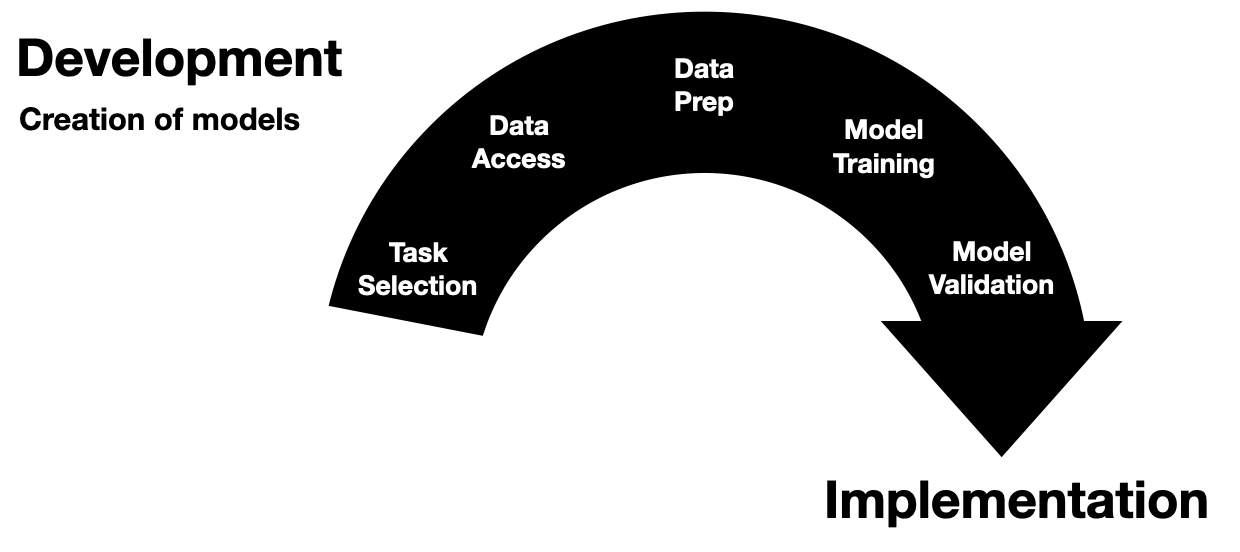

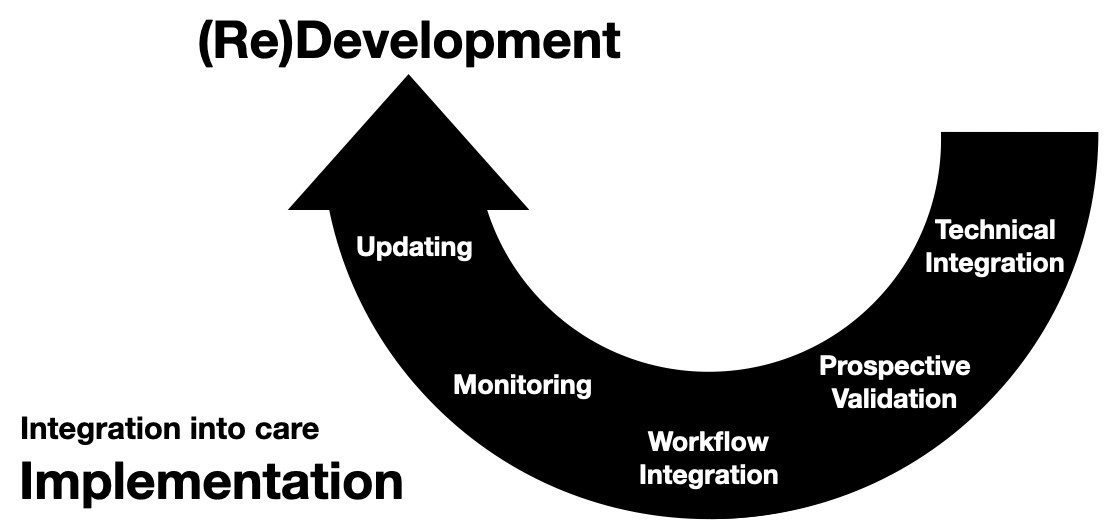

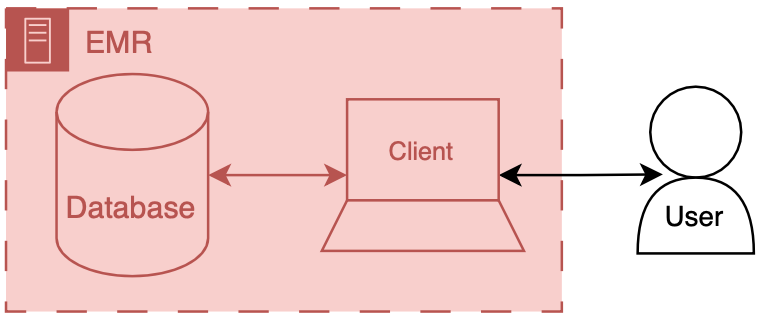

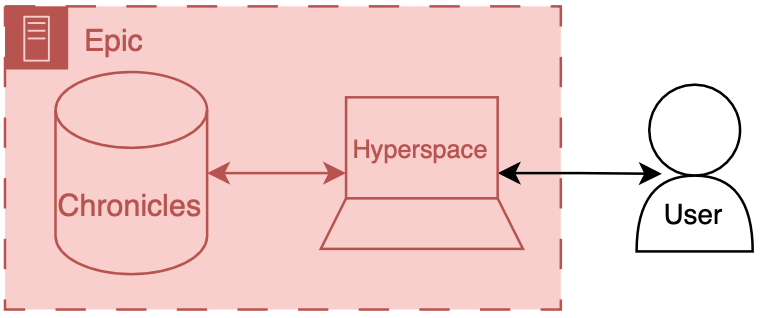

Invited to give a talk for the 2021 University of Michigan Center for Healthcare Engineering and Patient Safety (CHEPS) fall seminar series. Discussed engineering machine learning for medicine. Gave an overview of the whole healthcare AI/ML lifecycle and discussed it is chockablock with cool industrial & health systems engineering problems.

File: 2021-10-31-Using-NLP-to-Automatically-Assess-Feedback-Quality.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Using Natural Language Processing to Automatically Assess Feedback Quality: Findings From 3 Surgical Residencies” categories:

- Blog

- Research tags:

- Blog

- Research

- medicine

- healthcare

- artificial intelligence

- machine learning

- natural language processing

- medical education

-

SIMPL

Academic Medicine. Can be found here.

Abstract

Purpose

Learning is markedly improved with high-quality feedback, yet assuring the quality of feedback is difficult to achieve at scale. Natural language processing (NLP) algorithms may be useful in this context as they can automatically classify large volumes of narrative data. However, it is unknown if NLP models can accurately evaluate surgical trainee feedback. This study evaluated which NLP techniques best classify the quality of surgical trainee formative feedback recorded as part of a workplace assessment.

Method

During the 2016–2017 academic year, the SIMPL (Society for Improving Medical Professional Learning) app was used to record operative performance narrative feedback for residents at 3 university-based general surgery residency training programs. Feedback comments were collected for a sample of residents representing all 5 postgraduate year levels and coded for quality. In May 2019, the coded comments were then used to train NLP models to automatically classify the quality of feedback across 4 categories (effective, mediocre, ineffective, or other). Models included support vector machines (SVM), logistic regression, gradient boosted trees, naive Bayes, and random forests. The primary outcome was mean classification accuracy.

Results

The authors manually coded the quality of 600 recorded feedback comments. Those data were used to train NLP models to automatically classify the quality of feedback across 4 categories. The NLP model using an SVM algorithm yielded a maximum mean accuracy of 0.64 (standard deviation, 0.01). When the classification task was modified to distinguish only high-quality vs low-quality feedback, maximum mean accuracy was 0.83, again with SVM.

Conclusions

To the authors’ knowledge, this is the first study to examine the use of NLP for classifying feedback quality. SVM NLP models demonstrated the ability to automatically classify the quality of surgical trainee evaluations. Larger training datasets would likely further increase accuracy. ———————— File: 2021-11-01-INFORMS-Dynamic-Machine-Learning.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “INFORMS: Dynamic Machine Learning for Medical Practice” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- machine learning

- artificial intelligence

- operations research

- industrial engineering

-

health system engineering

INFORMS conference talk focused on dynamic machine learning for medicine. Based on Joint work with Jon Seymour, MD (Peers Health) and Brian Denton PhD (University of Michigan).

Time is a crucial factor of clinical practice. Our work explores the intersection of time and machine learning (ML) in the context of medicine. This presentation will examine the creation, validation, and deployment of dynamic ML models. We discuss dynamic prediction of future work status for patients who have experienced occupational injuries. Methodologically we cover a framework for dynamic prediction health-state prediction that combines a novel data transformation with an appropriate automatically generated deep learning architecture. These projects expand our understanding of how to effectively train and utilize dynamic machine learning models in the service of advancing health.

File: 2021-11-02-Trust-The-AI-You-Decide.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Forbes: Trust The AI? You Decide” categories:

- Blog

- Research tags:

- Blog

- Press

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

- machine learning

-

Forbes

Arun Shashtri of Forbes covered our JAMA IM Epic Sepsis Model evaluation paper. Check out the article.

File: 2021-11-19-Quantification-of-Sepsis-Model-Alerts-in-24-US-Hospitals-Before-and-During-the-COVID-19-Pandemic.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Quantification of Sepsis Model Alerts in 24 US Hospitals Before and During the COVID-19 Pandemic” categories:

- Blog

- Research tags:

- Blog

- Research

- sepsis

- deterioration index

- Epic

- early warning system

- medicine

- healthcare

- artificial intelligence

-

machine learning

JAMA Network Open. Can be found here.

Download paper.

File: 2021-12-02-NLP-and-Assessment-of-Resident-Feedback-Quality.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Natural Language Processing and Assessment of Resident Feedback Quality” categories:

- Blog

- Research tags:

- Blog

- Research

- medicine

- healthcare

- artificial intelligence

- machine learning

- natural language processing

- medical education

-

SIMPL

Journal of Surgical Education. Can be found here.

Abstract

Objective

To validate the performance of a natural language processing (NLP) model in characterizing the quality of feedback provided to surgical trainees.

Design

Narrative surgical resident feedback transcripts were collected from a large academic institution and classified for quality by trained coders. 75% of classified transcripts were used to train a logistic regression NLP model and 25% were used for testing the model. The NLP model was trained by uploading classified transcripts and tested using unclassified transcripts. The model then classified those transcripts into dichotomized high- and low- quality ratings. Model performance was primarily assessed in terms of accuracy and secondary performance measures including sensitivity, specificity, and area under the receiver operating characteristic curve (AUROC).

Setting

A surgical residency program based in a large academic medical center.

Participants

All surgical residents who received feedback via the Society for Improving Medical Professional Learning smartphone application (SIMPL, Boston, MA) in August 2019.

Results

The model classified the quality (high vs. low) of 2,416 narrative feedback transcripts with an accuracy of 0.83 (95% confidence interval: 0.80, 0.86), sensitivity of 0.37 (0.33, 0.45), specificity of 0.97 (0.96, 0.98), and an area under the receiver operating characteristic curve of 0.86 (0.83, 0.87).

Conclusions

The NLP model classified the quality of operative performance feedback with high accuracy and specificity. NLP offers residency programs the opportunity to efficiently measure feedback quality. This information can be used for feedback improvement efforts and ultimately, the education of surgical trainees. ———————— File: 2021-12-02-NLP-to-Estimate-Clinical-Competency-Committee-Ratings.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Natural Language Processing to Estimate Clinical Competency Committee Ratings” categories:

- Blog

- Research tags:

- Blog

- Research

- medicine

- healthcare

- artificial intelligence

- machine learning

- natural language processing

- medical education

-

SIMPL

Journal of Surgical Education. Can be found here.

Abstract

Objective

Residency program faculty participate in clinical competency committee (CCC) meetings, which are designed to evaluate residents’ performance and aid in the development of individualized learning plans. In preparation for the CCC meetings, faculty members synthesize performance information from a variety of sources. Natural language processing (NLP), a form of artificial intelligence, might facilitate these complex holistic reviews. However, there is little research involving the application of this technology to resident performance assessments. With this study, we examine whether NLP can be used to estimate CCC ratings.

Design

We analyzed end-of-rotation assessments and CCC assessments for all surgical residents who trained at one institution between 2014 and 2018. We created models of end-of-rotation assessment ratings and text to predict dichotomized CCC assessment ratings for 16 Accreditation Council for Graduate Medical Education (ACGME) Milestones. We compared the performance of models with and without predictors derived from NLP of end-of-rotation assessment text.

Results

We analyzed 594 end-of-rotation assessments and 97 CCC assessments for 24 general surgery residents. The mean (standard deviation) for area under the receiver operating characteristic curve (AUC) was 0.84 (0.05) for models with only non-NLP predictors, 0.83 (0.06) for models with only NLP predictors, and 0.87 (0.05) for models with both NLP and non-NLP predictors.

Conclusions

NLP can identify language correlated with specific ACGME Milestone ratings. In preparation for CCC meetings, faculty could use information automatically extracted from text to focus attention on residents who might benefit from additional support and guide the development of educational interventions. ———————— File: 2021-12-04-Comparative-Assessment-of-a-Machine-Learning-Model-and-Rectal-Swab-Surveillance-to-Predict-Hospital-Onset-Clostridioides-difficile.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Comparative Assessment of a Machine Learning Model and Rectal Swab Surveillance to Predict Hospital Onset Clostridioides difficile” categories:

- Blog

- Research tags:

- Blog

- Research

- Clostridioides difficile

- infectious disease

- early warning system

- medicine

- healthcare

- artificial intelligence

-

machine learning

IDWeek Abstract. Can be found here.

Download paper.

File: 2021-12-07-IOE-Research-Spotlight.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “IOE Research Spotlight” categories:

- Blog

- Talk tags:

- medicine

- healthcare

- machine learning

- artificial intelligence

- operations research

- industrial engineering

-

health system engineering

Shared an overview of my research during the 2021 University of Michigan Department of Industrial and Operations Engineering recruiting weekend.

File: 2021-12-09-Precision-Health-Webinar.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Precision Health Webinar: What Clinicians Need to Know when Using AI” categories:

- Blog

- Talk tags:

- medicine

- machine learning

-

artificial intelligence

Panel discussion on what is important for clinicians to know and how confident they can be when using these AI tools. Conversation with Drs. Rada Mihalcea, Max Spadafore, and Cornelius James.

File: 2022-01-07-hello-world.md Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000” — title: “Hello, World!” categories:

- Blog tags:

-

Blog

Hello, World!

Welcome to Ötleş Notes! It’s a blog by me (Erkin Ötleş).

For a little background: I am a Medical Scientist Training Program Fellow at the University of Michigan. What does that mean in English? It means I am a very silly person who decided to go to school forever in order to study medicine (MD) and engineering (PhD in industrial and operations engineering). Generally, I am fascinated by the intersection of engineering and medicine. I strongly believe that both fields have a lot to learn from one another. While working between the two presents challenges, I am genuinely grateful to learn from wonderful mentors and colleagues in both fields.

As I come across interesting topics that pertain to medicine or engineering I’ll try to share them here along with my perspective. I won’t make any guarantees regarding posting frequency or topics. However, I will to make every effort to cite original sources and be as factual as possible.

Ultimately this is a project for myself: 1) to help strengthen my written communication skills and 2) allow me to explore a broader space of ideas. If you happen to get something out of it too in the meantime that’s a wonderful byproduct.

If you have ideas about my ideas feel free to reach out to me on twitter (@eotles) or write me an email.

Cheers,

Erkin

Go ÖN Home

————————

File: 2022-01-10-solving-wordle.md

Creation Date: “Fri, 6 Mar 2026 19:13:35 +0000”

—

title: “Solving Wordle”

categories:

- Blog tags:

- wordle

- decision science

- operations research

- optimization

- games

- artificial intelligence

-

machine learning

Let’s talk about Wordle. [1] You, like me, might have been drawn into this game recently, courtesy of those yellow and green squares on twitter. The rules are simple, you get 6 attempts to guess the 5 letter word. After every attempt you get feedback in the form of the colored squares around your letters. Grey means this character isn’t used at all. Yellow means that the character is used, but in a different position. Finally, green means you nailed the character to (one of) the right position(s). Here’s an example of a played game:

A valiant wordle attempt by J.B. Cheadle (January 10th 2022)

It’s pretty fun to play, although wracking your brain for 5 letter words can be annoying, especially since you are not allowed to guess words that aren’t real words (e.g., you can’t use AEIOU). Once I got the hang of the game’s mechanics my natural inclination was to not enjoy the once daily word guessing diversion, but was to find a way to “solve wordle”.

Now, what does it mean to “solve wordle”? Maybe you would like to start with a really good guess? Maybe you would like to guarantee that you win the game (i.e., guess the right word by your sixth try)? Or perhaps, you’d like to win the game and get the most amount of greens or yellow on the way? “Solving” is a subjective and probably depends on your preferences.

Due to this subjectivity I think there’s couple valid ways to tackle wordle. If you have a strong preference for one type of solution you might be able to express that directly and then solve the game in order to get the optimal way to play. I’m going to try to avoid the O-word because: 1) I don’t know what you’d like to optimize for and 2) these approaches below don’t solve for the true optimal solution (they are heuristics).

The solution strategies I’ve explored thus far can be broken down into two major categories. The first set of strategies are trying to find really good first words to start with (First Word) and the second set are finding strategies that can be used to pick good words throughout the course of the game in response to responses received from guesses (Gameplay).

Let’s start with the First Words strategies: there are two first word strategies that can be employed based on how you’d like to start your game. First Word - Common Characters: ideal if you’d like to start your game using words that have the most common characters with all the solution words. Think of this as trying to maximize the number of yellow characters that you get on the first try.

-

First Word - Right Character in Right Position: ideal if you’d like to start the game using words that have the highest likelihood of having the right characters in the right position. This would yield the most number of green characters.

Rank Solution Words Usable Words 1st later, alter, alert oater, orate, roate 2nd sonic, scion lysin 2nd pudgy chump :) -

First Word - Right Character in Right Position: ideal if you’d like to start the game using words that have the highest likelihood of having the right characters in the right position. This would yield the most number of green characters.

Rank Solution (& Usable) Words 1st slate 2nd crony 2nd build

Note on solution word vs. usable words. Wordle has two sets of words, solution words and other words. Other words are never the correct answer but can be used as a guess. There’s a chance that other words can be used to get a lot of yellows, despite never being the correct answer. So I created a list of usable words that combined the solution words and the other words. Notice that the First Word - Common Characters strategy has two lists. That’s because there are other words like “oater” that are more likely to produce yellows than the best solution word “later”. This isn’t the case for the First Word - Right Character in Right Position, as it produces the same results for both sets of words.

You might also observe that there are several sets of words in terms of 1st, 2nd, and 3rd. If you wanted you could use these strategies over several rounds to build up your knowledge. However, these strategies don’t take into account the feedback that you get from the game. So there may be better ways to play the game that take into account what kind of results you get after you put in a guess.